human

Papers with tag human

2022

- Learnable human mesh triangulation for 3D human pose and shape estimation. Sungho Chun, Sungbum Park, and Ju Yong Chang. 2022.

Compared to joint position, the accuracy of joint rotation and shape estimation has received relatively little attention in the skinned multi-person linear model (SMPL)-based human mesh reconstruction from multi-view images. The work in this field is broadly classified into two categories. The first approach performs joint estimation and then produces SMPL parameters by fitting SMPL to resultant joints. The second approach regresses SMPL parameters directly from the input images through a convolutional neural network (CNN)-based model. However, these approaches suffer from the lack of information for resolving the ambiguity of joint rotation and shape reconstruction and the difficulty of network learning. To solve the aforementioned problems, we propose a two-stage method. The proposed method first estimates the coordinates of mesh vertices through a CNN-based model from input images, and acquires SMPL parameters by fitting the SMPL model to the estimated vertices. Estimated mesh vertices provide sufficient information for determining joint rotation and shape, and are easier to learn than SMPL parameters. According to experiments using Human3.6M and MPI-INF-3DHP datasets, the proposed method significantly outperforms the previous works in terms of joint rotation and shape estimation, and achieves competitive performance in terms of joint location estimation.

Judges visibility in each view before fusing features, then appends a fitting module.

human mv 1p SMPL e2e

@inproceedings{lrmesh, title = {Learnable human mesh triangulation for 3D human pose and shape estimation}, author = {Chun, Sungho and Park, Sungbum and Chang, Ju Yong}, year = {2022}, tags = {human, mv, 1p, SMPL, e2e}, }

- On Triangulation as a Form of Self-Supervision for 3D Human Pose Estimation. Soumava Kumar Roy, Leonardo Citraro, Sina Honari, and Pascal Fua. 2022.

Supervised approaches to 3D pose estimation from single images are remarkably effective when labeled data is abundant. However, as the acquisition of ground-truth 3D labels is labor intensive and time consuming, recent attention has shifted towards semi- and weakly-supervised learning. Generating an effective form of supervision with few annotations still poses a major challenge in crowded scenes. In this paper we propose to impose multi-view geometrical constraints by means of a weighted differentiable triangulation and use it as a form of self-supervision when no labels are available. We therefore train a 2D pose estimator in such a way that its predictions correspond to the re-projection of the triangulated 3D pose and train an auxiliary network on them to produce the final 3D poses. We complement the triangulation with a weighting mechanism that alleviates the impact of noisy predictions caused by self-occlusion or occlusion from other subjects. We demonstrate the effectiveness of our semi-supervised approach on Human3.6M and MPI-INF-3DHP datasets, as well as on a new multi-view multi-person dataset that features occlusion.

Uses multi-view triangulation for self-supervision.
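The weighted triangulation described in the abstract can be illustrated with a classical linear (DLT) solve. This is a minimal NumPy sketch, not the authors' implementation: the function names `triangulate_weighted` and `project`, the toy cameras, and the hand-set weights are assumptions made for illustration. The paper's version is differentiable and its weights come from the network, which plain NumPy does not provide.

```python
import numpy as np


def triangulate_weighted(projections, points_2d, weights):
    """Weighted linear (DLT) triangulation of a single joint.

    projections: (V, 3, 4) camera projection matrices.
    points_2d:   (V, 2) detected image coordinates, one per view.
    weights:     (V,) non-negative confidences; a low value down-weights a view.
    Returns the triangulated 3D point as a length-3 array.
    """
    projections = np.asarray(projections, dtype=float)
    points_2d = np.asarray(points_2d, dtype=float)
    weights = np.asarray(weights, dtype=float)

    rows = []
    for P, (u, v), w in zip(projections, points_2d, weights):
        # Each view contributes two linear constraints on the homogeneous point.
        rows.append(w * (u * P[2] - P[0]))
        rows.append(w * (v * P[2] - P[1]))
    A = np.stack(rows)  # shape (2V, 4)

    # Least-squares solution: right singular vector of the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]


def project(P, X):
    """Project a 3D point with a 3x4 projection matrix (hypothetical helper)."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]


# Toy setup (assumed): three cameras with identity rotation and shifted centers.
cameras = np.array([
    np.hstack([np.eye(3), np.array([[tx], [ty], [0.0]])])
    for tx, ty in [(0.0, 0.0), (-1.0, 0.0), (0.0, -1.0)]
])
X_true = np.array([0.2, -0.1, 4.0])
detections = np.array([project(P, X_true) for P in cameras])
detections[2] += 0.5  # simulate an occluded, badly localized view

# Down-weighting the corrupted view keeps the estimate on the true point.
X_est = triangulate_weighted(cameras, detections, weights=[1.0, 1.0, 0.0])
```

Setting the weight of an unreliable view close to zero removes its influence on the solution, which is the effect the weighting mechanism is meant to have on detections corrupted by occlusion.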

human mv 1p 3dpose e2e self-supervised

@inproceedings{2203.15865, title = {On Triangulation as a Form of Self-Supervision for 3D Human Pose Estimation}, author = {Roy, Soumava Kumar and Citraro, Leonardo and Honari, Sina and Fua, Pascal}, year = {2022}, tags = {human, mv, 1p, 3dpose, e2e, self-supervised}, }

- PPT: token-Pruned Pose Transformer for monocular and multi-view human pose estimation. Haoyu Ma, Zhe Wang, Yifei Chen, Deying Kong, Liangjian Chen, Xingwei Liu, Xiangyi Yan, Hao Tang, and Xiaohui Xie. 2022.

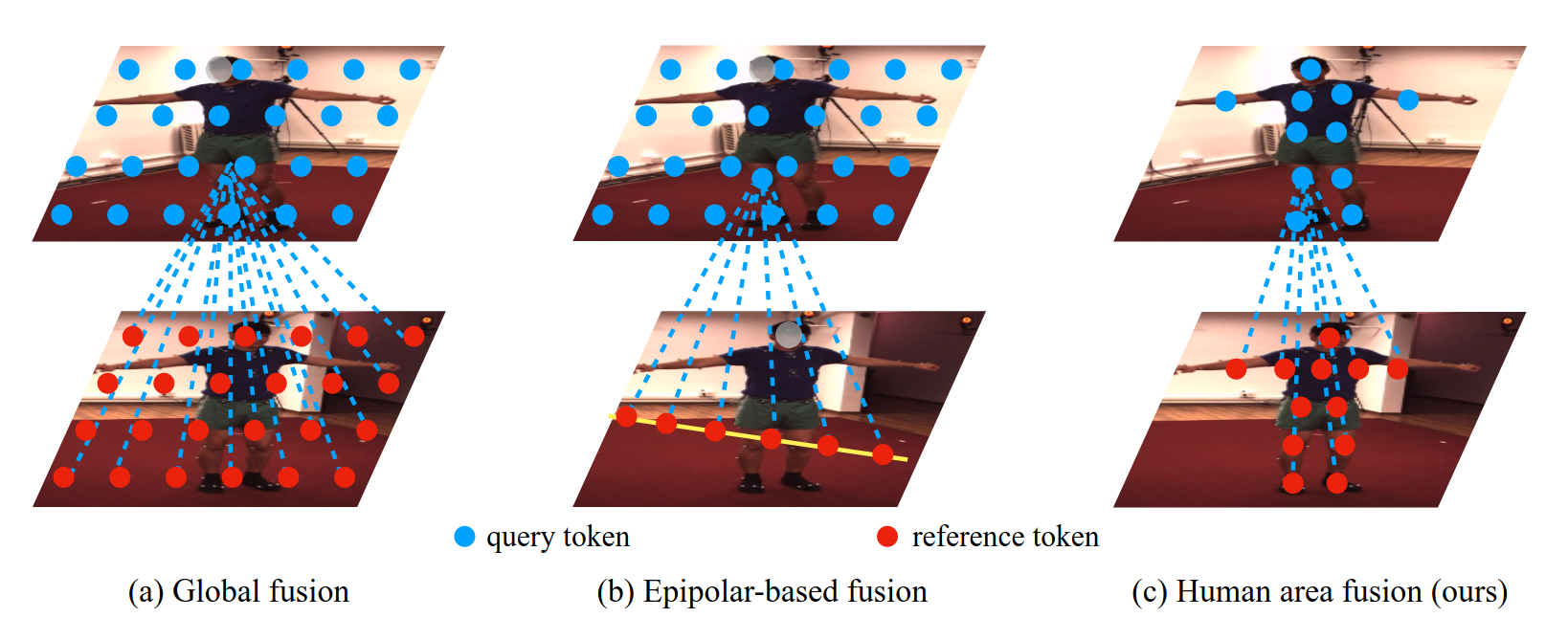

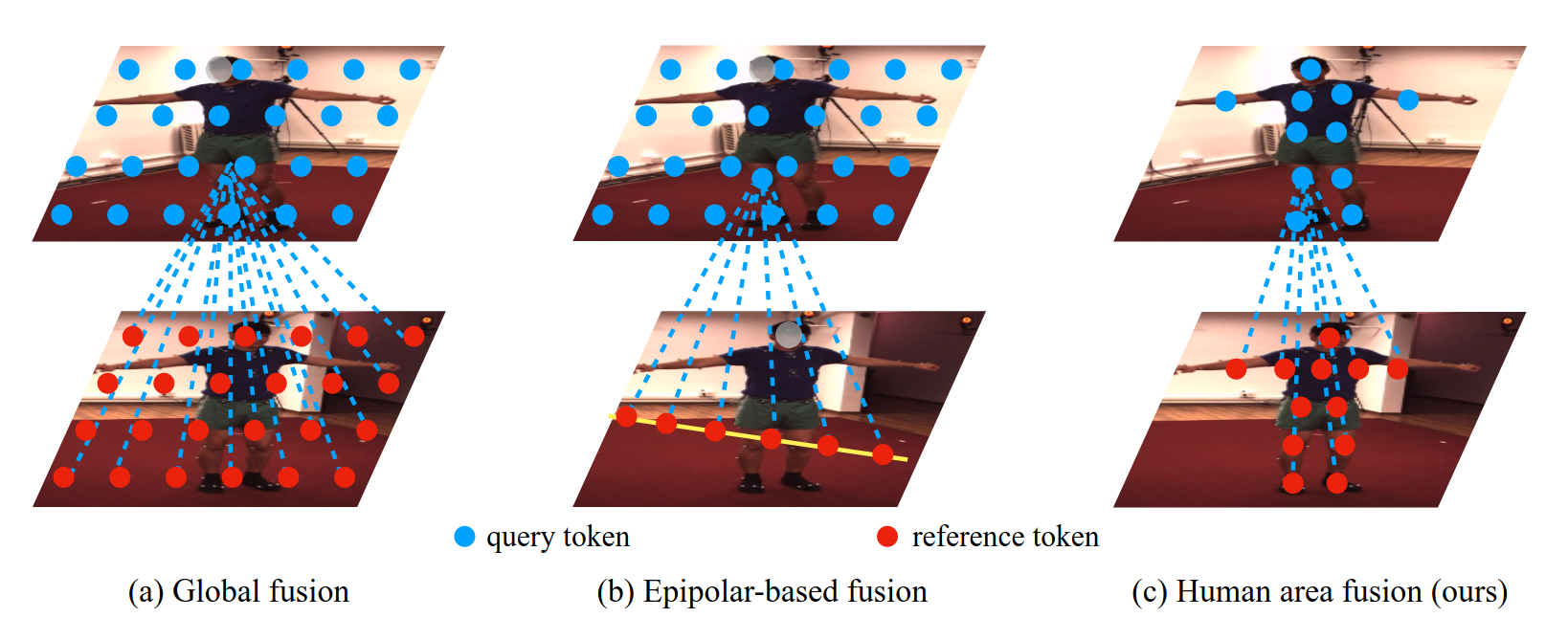

Recently, the vision transformer and its variants have played an increasingly important role in both monocular and multi-view human pose estimation. Considering image patches as tokens, transformers can model the global dependencies within the entire image or across images from other views. However, global attention is computationally expensive. As a consequence, it is difficult to scale up these transformer-based methods to high-resolution features and many views. In this paper, we propose the token-Pruned Pose Transformer (PPT) for 2D human pose estimation, which can locate a rough human mask and perform self-attention only within selected tokens. Furthermore, we extend our PPT to multi-view human pose estimation. Built upon PPT, we propose a new cross-view fusion strategy, called human area fusion, which considers all human foreground pixels as corresponding candidates. Experimental results on COCO and MPII demonstrate that our PPT can match the accuracy of previous pose transformer methods while reducing the computation. Moreover, experiments on Human 3.6M and Ski-Pose demonstrate that our Multi-view PPT can efficiently fuse cues from multiple views and achieve new state-of-the-art results.

Uses the human region for fusion.
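The idea of running self-attention only on selected tokens can be sketched in a few lines. This is a simplified NumPy illustration under stated assumptions, not PPT itself: the per-token `scores` are taken as given (how PPT derives its rough human mask is not reproduced here), there are no learned query/key/value projections, and the name `pruned_self_attention` is hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def pruned_self_attention(tokens, scores, keep):
    """Self-attention restricted to the `keep` highest-scoring tokens.

    tokens: (N, D) patch embeddings.
    scores: (N,) relevance score per token (assumed to be provided).
    keep:   number of tokens to retain.
    Returns (attended, idx): attended has shape (keep, D) and idx holds the
    indices of the retained tokens in their original order.
    """
    tokens = np.asarray(tokens, dtype=float)
    scores = np.asarray(scores, dtype=float)

    idx = np.sort(np.argsort(scores)[-keep:])  # top-`keep` tokens, original order
    selected = tokens[idx]

    # Attention cost is now O(keep^2) instead of O(N^2).
    attn = softmax(selected @ selected.T / np.sqrt(tokens.shape[1]))
    return attn @ selected, idx


rng = np.random.default_rng(0)
patch_tokens = rng.normal(size=(6, 4))
human_scores = np.array([0.1, 0.9, 0.2, 0.8, 0.05, 0.7])
attended, kept = pruned_self_attention(patch_tokens, human_scores, keep=3)
```

Because attention scales quadratically with the number of tokens, keeping half of them cuts the attention matrix to a quarter of its original size, which is the saving the abstract refers to.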

human mv 1p 3dpose

@inproceedings{PPT, title = {PPT: token-Pruned Pose Transformer for monocular and multi-view human pose estimation}, author = {Ma, Haoyu and Wang, Zhe and Chen, Yifei and Kong, Deying and Chen, Liangjian and Liu, Xingwei and Yan, Xiangyi and Tang, Hao and Xie, Xiaohui}, year = {2022}, tags = {human, mv, 1p, 3dpose}, }

- VTP: Volumetric Transformer for Multi-view Multi-person 3D Pose Estimation. Yuxing Chen, Renshu Gu, Ouhan Huang, and Gangyong Jia. 2022.

This paper presents Volumetric Transformer Pose estimator (VTP), the first 3D volumetric transformer framework for multi-view multi-person 3D human pose estimation. VTP aggregates features from 2D keypoints in all camera views and directly learns the spatial relationships in the 3D voxel space in an end-to-end fashion. The aggregated 3D features are passed through 3D convolutions before being flattened into sequential embeddings and fed into a transformer. A residual structure is designed to further improve the performance. In addition, the sparse Sinkhorn attention is empowered to reduce the memory cost, which is a major bottleneck for volumetric representations, while also achieving excellent performance. The output of the transformer is again concatenated with 3D convolutional features by a residual design. The proposed VTP framework integrates the high performance of the transformer with volumetric representations, which can be used as a good alternative to the convolutional backbones. Experiments on the Shelf, Campus and CMU Panoptic benchmarks show promising results in terms of both Mean Per Joint Position Error (MPJPE) and Percentage of Correctly estimated Parts (PCP). Our code will be available.

Uses a 3D volumetric transformer to regress human body coordinates.
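The two metrics named in the abstract are short enough to write down. This is a minimal NumPy sketch, assuming poses are arrays of shape (J, 3) in a shared coordinate frame. The function names, the `limbs` list of joint-index pairs, and the 0.5 threshold are illustrative assumptions; 0.5 is a commonly used PCP threshold, but the paper's exact evaluation protocol is not stated here.

```python
import numpy as np


def mpjpe(pred, gt):
    """Mean Per Joint Position Error: average Euclidean distance over joints."""
    pred = np.asarray(pred, dtype=float)
    gt = np.asarray(gt, dtype=float)
    return float(np.linalg.norm(pred - gt, axis=-1).mean())


def pcp(pred, gt, limbs, alpha=0.5):
    """Percentage of Correct Parts.

    A limb (a, b) counts as correct when the mean error of its two endpoints
    is at most `alpha` times the ground-truth limb length.
    """
    pred = np.asarray(pred, dtype=float)
    gt = np.asarray(gt, dtype=float)
    correct = 0
    for a, b in limbs:
        endpoint_err = 0.5 * (
            np.linalg.norm(pred[a] - gt[a]) + np.linalg.norm(pred[b] - gt[b])
        )
        limb_len = np.linalg.norm(gt[a] - gt[b])
        correct += int(endpoint_err <= alpha * limb_len)
    return correct / len(limbs)


# Toy three-joint chain (assumed data, arbitrary units).
gt_pose = np.array([[0.0, 0.0, 0.0], [0.0, 0.0, 1.0], [0.0, 0.0, 2.0]])
pred_pose = gt_pose.copy()
pred_pose[2] += np.array([3.0, 0.0, 0.0])  # one badly misplaced joint
chain = [(0, 1), (1, 2)]

error = mpjpe(pred_pose, gt_pose)
score = pcp(pred_pose, gt_pose, chain)
```

MPJPE averages the damage of one bad joint over all joints, while PCP marks every limb touching that joint as wrong, so the two metrics can rank methods differently.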

human mv mp 3dpose transformer

@inproceedings{VTP, title = {VTP: Volumetric Transformer for Multi-view Multi-person 3D Pose Estimation}, author = {Chen, Yuxing and Gu, Renshu and Huang, Ouhan and Jia, Gangyong}, year = {2022}, tags = {human, mv, mp, 3dpose, transformer}, }

- Learned Vertex Descent: A New Direction for 3D Human Model Fitting. Enric Corona, Gerard Pons-Moll, Guillem Alenyà, and Francesc Moreno-Noguer. 2022.

We propose a novel optimization-based paradigm for 3D human model fitting on images and scans. In contrast to existing approaches that directly regress the parameters of a low-dimensional statistical body model (e.g. SMPL) from input images, we train an ensemble of per-vertex neural fields network. The network predicts, in a distributed manner, the vertex descent direction towards the ground truth, based on neural features extracted at the current vertex projection. At inference, we employ this network, dubbed LVD, within a gradient-descent optimization pipeline until its convergence, which typically occurs in a fraction of a second even when initializing all vertices into a single point. An exhaustive evaluation demonstrates that our approach is able to capture the underlying body of clothed people with very different body shapes, achieving a significant improvement compared to state-of-the-art. LVD is also applicable to 3D model fitting of humans and hands, for which we show a significant improvement to the SOTA with a much simpler and faster method.

A new framework for pose estimation.
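The inference loop described in the abstract (repeatedly move each vertex along a predicted descent direction until convergence) can be sketched as follows. This is only an illustration of the loop structure: `fit_by_vertex_descent` and `oracle_step` are hypothetical names, and the stand-in predictor below cheats by knowing the target, whereas LVD predicts the step from image features sampled at each vertex projection.

```python
import numpy as np


def fit_by_vertex_descent(predict_step, init_vertices, max_iters=100, tol=1e-6):
    """Iteratively update vertices with a predicted per-vertex step.

    predict_step:  callable mapping (V, 3) vertices to a (V, 3) update.
    init_vertices: (V, 3) starting positions.
    Stops when the largest step component falls below `tol`.
    Returns (vertices, number_of_iterations).
    """
    vertices = np.array(init_vertices, dtype=float)
    iters = 0
    for iters in range(1, max_iters + 1):
        step = predict_step(vertices)
        vertices = vertices + step
        if np.abs(step).max() < tol:
            break
    return vertices, iters


# Stand-in predictor (assumption): an oracle that moves halfway to a known target.
rng = np.random.default_rng(0)
target = rng.uniform(-1.0, 1.0, size=(10, 3))


def oracle_step(vertices):
    return 0.5 * (target - vertices)


# All vertices start at a single point, as in the abstract.
fitted, n_iters = fit_by_vertex_descent(oracle_step, np.zeros((10, 3)))
```

With a predictor that halves the remaining distance on every call, the error shrinks geometrically, so the loop stops well before `max_iters` even from a degenerate initialization.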

human monocular 1p SMPL

@inproceedings{LVD, title = {Learned Vertex Descent: A New Direction for 3D Human Model Fitting}, author = {Corona, Enric and Pons-Moll, Gerard and Alenyà, Guillem and Moreno-Noguer, Francesc}, year = {2022}, tags = {human, monocular, 1p, SMPL}, }

- SUPR: A Sparse Unified Part-Based Human Representation. Ahmed A. A. Osman, Timo Bolkart, Dimitrios Tzionas, and Michael J. Black. 2022.

Statistical 3D shape models of the head, hands, and full body are widely used in computer vision and graphics. Despite their wide use, we show that existing models of the head and hands fail to capture the full range of motion for these parts. Moreover, existing work largely ignores the feet, which are crucial for modeling human movement and have applications in biomechanics, animation, and the footwear industry. The problem is that previous body part models are trained using 3D scans that are isolated to the individual parts. Such data does not capture the full range of motion for such parts, e.g. the motion of head relative to the neck. Our observation is that full-body scans provide important information about the motion of the body parts. Consequently, we propose a new learning scheme that jointly trains a full-body model and specific part models using a federated dataset of full-body and body-part scans. Specifically, we train an expressive human body model called SUPR (Sparse Unified Part-Based Human Representation), where each joint strictly influences a sparse set of model vertices. The factorized representation enables separating SUPR into an entire suite of body part models. Note that the feet have received little attention and existing 3D body models have highly under-actuated feet. Using novel 4D scans of feet, we train a model with an extended kinematic tree that captures the range of motion of the toes. Additionally, feet deform due to ground contact. To model this, we include a novel non-linear deformation function that predicts foot deformation conditioned on the foot pose, shape, and ground contact. We train SUPR on an unprecedented number of scans: 1.2 million body, head, hand and foot scans. We quantitatively compare SUPR and the separated body parts and find that our suite of models generalizes better than existing models. SUPR is available at http://supr.is.tue.mpg.de

A part-based human body model.

human human-part human-representation

@inproceedings{SUPR, title = {SUPR: A Sparse Unified Part-Based Human Representation}, author = {Osman, Ahmed A. A. and Bolkart, Timo and Tzionas, Dimitrios and Black, Michael J.}, year = {2022}, tags = {human, human-part, human-representation}, }

- FIND: An Unsupervised Implicit 3D Model of Articulated Human Feet. Oliver Boyne, James Charles, and Roberto Cipolla. 2022.

In this paper we present a high fidelity and articulated 3D human foot model. The model is parameterised by a disentangled latent code in terms of shape, texture and articulated pose. While high fidelity models are typically created with strong supervision such as 3D keypoint correspondences or pre-registration, we focus on the difficult case of little to no annotation. To this end, we make the following contributions: (i) we develop a Foot Implicit Neural Deformation field model, named FIND, capable of tailoring explicit meshes at any resolution i.e. for low or high powered devices; (ii) an approach for training our model in various modes of weak supervision with progressively better disentanglement as more labels, such as pose categories, are provided; (iii) a novel unsupervised part-based loss for fitting our model to 2D images which is better than traditional photometric or silhouette losses; (iv) finally, we release a new dataset of high resolution 3D human foot scans, Foot3D. On this dataset, we show our model outperforms a strong PCA implementation trained on the same data in terms of shape quality and part correspondences, and that our novel unsupervised part-based loss improves inference on images.

Trains an implicit representation of the foot, self-supervised from RGB images.

human feet human-representation implicit-model

@inproceedings{FIND, title = {FIND: An Unsupervised Implicit 3D Model of Articulated Human Feet}, author = {Boyne, Oliver and Charles, James and Cipolla, Roberto}, year = {2022}, tags = {human, feet, human-representation, implicit-model}, }

- HDHumans: A Hybrid Approach for High-fidelity Digital Humans. Marc Habermann, Lingjie Liu, Weipeng Xu, Gerard Pons-Moll, Michael Zollhoefer, and Christian Theobalt. 2022.

Photo-real digital human avatars are of enormous importance in graphics, as they enable immersive communication over the globe, improve gaming and entertainment experiences, and can be particularly beneficial for AR and VR settings. However, current avatar generation approaches either fall short in high-fidelity novel view synthesis, generalization to novel motions, reproduction of loose clothing, or they cannot render characters at the high resolution offered by modern displays. To this end, we propose HDHumans, which is the first method for HD human character synthesis that jointly produces an accurate and temporally coherent 3D deforming surface and highly photo-realistic images of arbitrary novel views and of motions not seen at training time. At the technical core, our method tightly integrates a classical deforming character template with neural radiance fields (NeRF). Our method is carefully designed to achieve a synergy between classical surface deformation and NeRF. First, the template guides the NeRF, which allows synthesizing novel views of a highly dynamic and articulated character and even enables the synthesis of novel motions. Second, we also leverage the dense pointclouds resulting from NeRF to further improve the deforming surface via 3D-to-3D supervision. We outperform the state of the art quantitatively and qualitatively in terms of synthesis quality and resolution, as well as the quality of 3D surface reconstruction.

An extension of DeepCap.

human-synthesis view-synthesis human-modeling human-performance-capture

@inproceedings{HDHumans, title = {HDHumans: A Hybrid Approach for High-fidelity Digital Humans}, author = {Habermann, Marc and Liu, Lingjie and Xu, Weipeng and Pons-Moll, Gerard and Zollhoefer, Michael and Theobalt, Christian}, year = {2022}, tags = {human-synthesis, view-synthesis, human-modeling, human-performance-capture}, }

- Bootstrapping Human Optical Flow and Pose. Aritro Roy Arko, James J. Little, and Kwang Moo Yi. 2022.

We propose a bootstrapping framework to enhance human optical flow and pose. We show that, for videos involving humans in scenes, we can improve both the optical flow and the pose estimation quality of humans by considering the two tasks at the same time. We enhance optical flow estimates by fine-tuning them to fit the human pose estimates and vice versa. In more detail, we optimize the pose and optical flow networks to, at inference time, agree with each other. We show that this results in state-of-the-art results on the Human 3.6M and 3D Poses in the Wild datasets, as well as a human-related subset of the Sintel dataset, both in terms of pose estimation accuracy and the optical flow accuracy at human joint locations. Code available at https://github.com/ubc-vision/bootstrapping-human-optical-flow-and-pose

Uses pose to enhance optical flow.

human optical-flow pose-estimation

@inproceedings{2210.15121, title = {Bootstrapping Human Optical Flow and Pose}, author = {Arko, Aritro Roy and Little, James J. and Yi, Kwang Moo}, year = {2022}, tags = {human, optical-flow, pose-estimation}, }

- Human Body Measurement Estimation with Adversarial Augmentation. Nataniel Ruiz, Miriam Bellver, Timo Bolkart, Ambuj Arora, Ming C. Lin, Javier Romero, and Raja Bala. 2022.

We present a Body Measurement network (BMnet) for estimating 3D anthropomorphic measurements of the human body shape from silhouette images. Training of BMnet is performed on data from real human subjects, and augmented with a novel adversarial body simulator (ABS) that finds and synthesizes challenging body shapes. ABS is based on the skinned multiperson linear (SMPL) body model, and aims to maximize BMnet measurement prediction error with respect to latent SMPL shape parameters. ABS is fully differentiable with respect to these parameters, and trained end-to-end via backpropagation with BMnet in the loop. Experiments show that ABS effectively discovers adversarial examples, such as bodies with extreme body mass indices (BMI), consistent with the rarity of extreme-BMI bodies in BMnet’s training set. Thus ABS is able to reveal gaps in training data and potential failures in predicting under-represented body shapes. Results show that training BMnet with ABS improves measurement prediction accuracy on real bodies by up to 10%, when compared to no augmentation or random body shape sampling. Furthermore, our method significantly outperforms SOTA measurement estimation methods by as much as 3x. Finally, we release BodyM, the first challenging, large-scale dataset of photo silhouettes and body measurements of real human subjects, to further promote research in this area. Project website: https://adversarialbodysim.github.io

human-shape

@inproceedings{2210.05667, title = {Human Body Measurement Estimation with Adversarial Augmentation}, author = {Ruiz, Nataniel and Bellver, Miriam and Bolkart, Timo and Arora, Ambuj and Lin, Ming C. and Romero, Javier and Bala, Raja}, year = {2022}, tags = {human-shape}, }

-

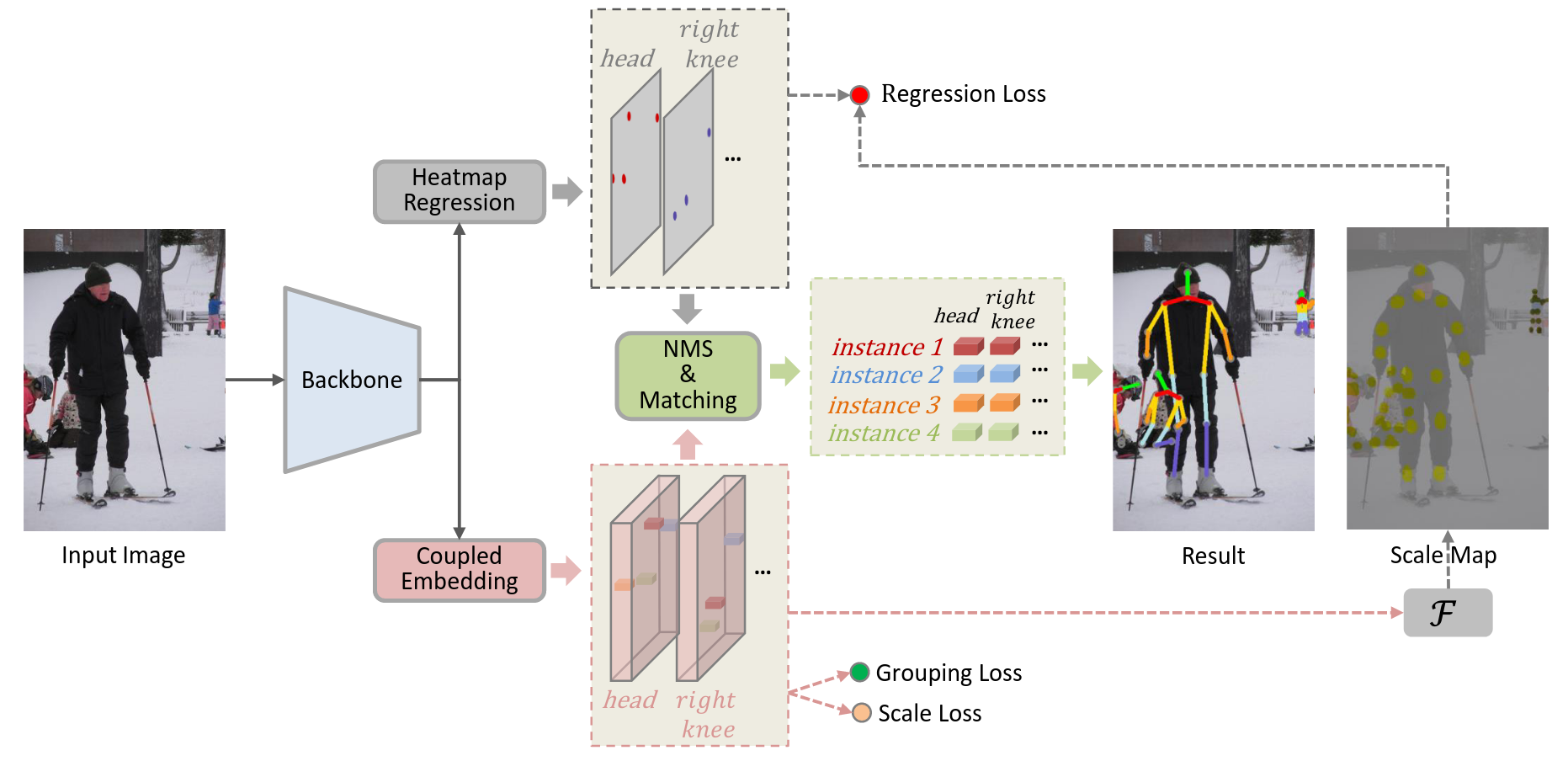

Regularizing Vector Embedding in Bottom-Up Human Pose Estimation. In ECCV 2022.

Uses scale to improve the embedding.

human-pose-estimation bottom-up

@inproceedings{Regularizing_Vector_Embedding, title = {Regularizing Vector Embedding in Bottom-Up Human Pose Estimation}, author = {}, year = {2022}, tags = {human-pose-estimation, bottom-up}, booktitle = {ECCV}, }

-

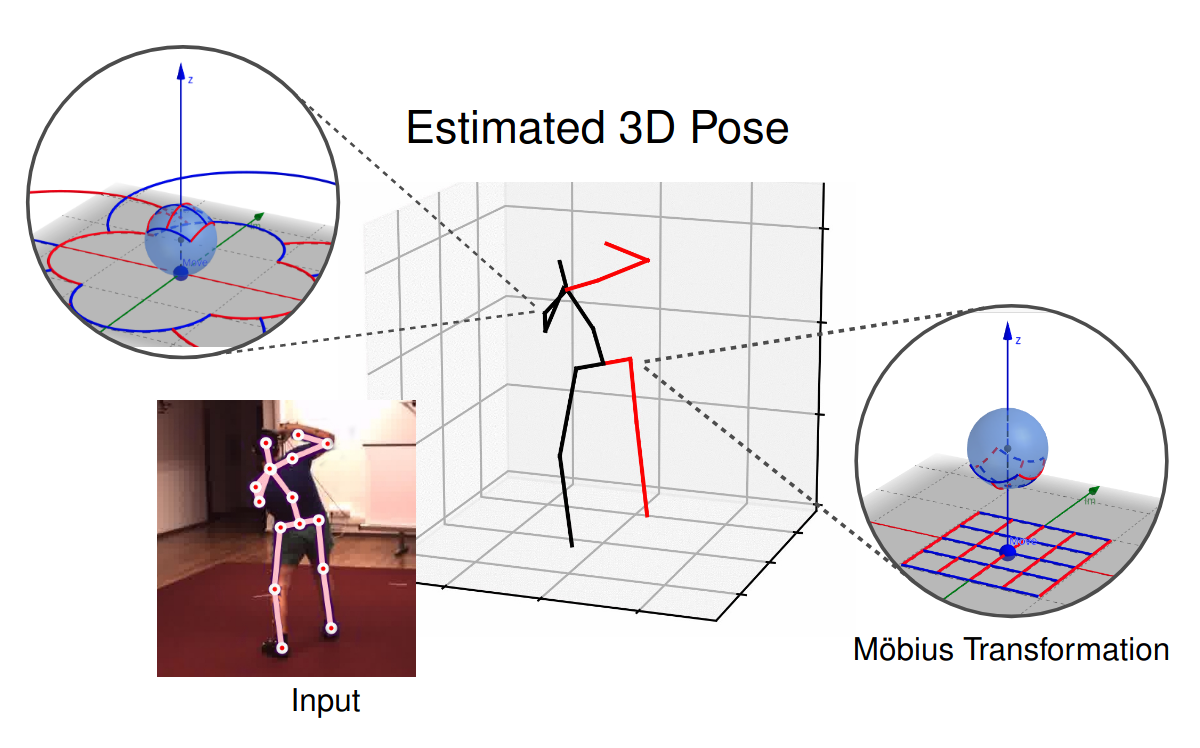

3D Human Pose Estimation Using Möbius Graph Convolutional Networks. Niloofar Azizi, Horst Possegger, Emanuele Rodolà, and Horst Bischof. In ECCV 2022.

3D human pose estimation is fundamental to understanding human behavior. Recently, promising results have been achieved by graph convolutional networks (GCNs), which achieve state-of-the-art performance and provide rather light-weight architectures. However, a major limitation of GCNs is their inability to encode all the transformations between joints explicitly. To address this issue, we propose a novel spectral GCN using the Möbius transformation (MöbiusGCN). In particular, this allows us to directly and explicitly encode the transformation between joints, resulting in a significantly more compact representation. Compared to even the lightest architectures so far, our novel approach requires 90-98% fewer parameters, i.e. our lightest MöbiusGCN uses only 0.042M trainable parameters. Besides the drastic parameter reduction, explicitly encoding the transformation of joints also enables us to achieve state-of-the-art results. We evaluate our approach on the two challenging pose estimation benchmarks, Human3.6M and MPI-INF-3DHP, demonstrating both state-of-the-art results and the generalization capabilities of MöbiusGCN.

human-pose-estimation gcn

@inproceedings{Mobius_GCN, title = {3D Human Pose Estimation Using Möbius Graph Convolutional Networks}, author = {Azizi, Niloofar and Possegger, Horst and Rodolà, Emanuele and Bischof, Horst}, year = {2022}, tags = {human-pose-estimation, gcn}, booktitle = {ECCV}, }

-

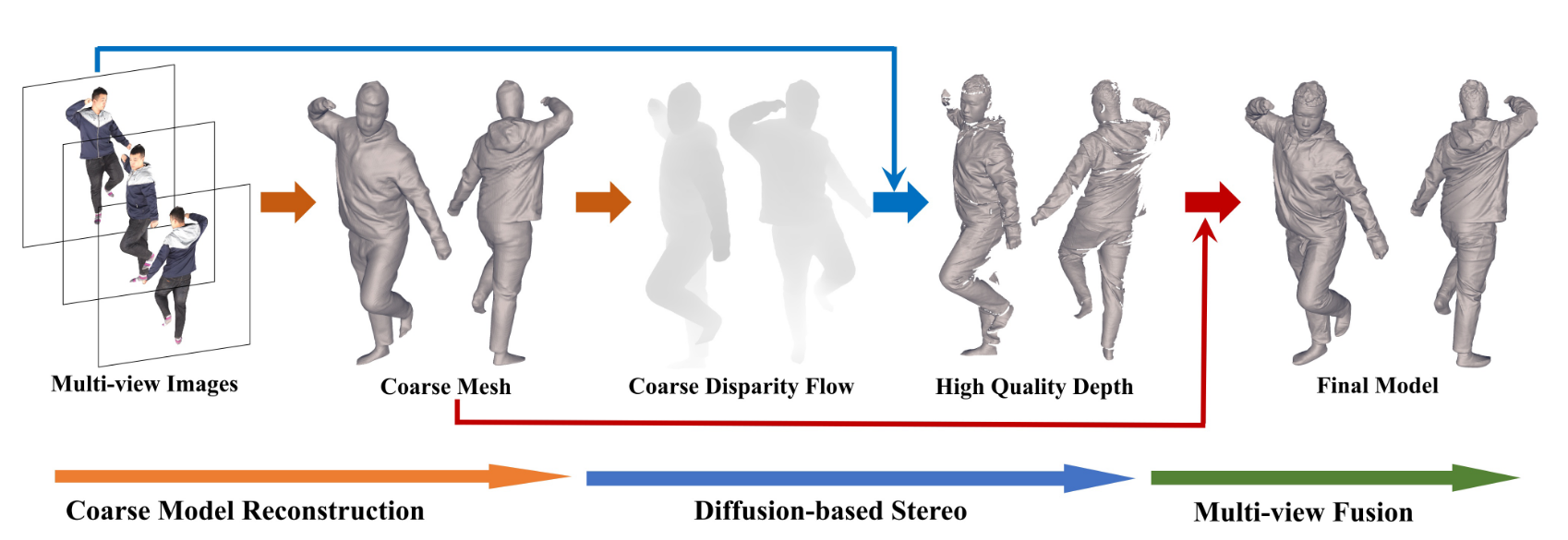

DiffuStereo: High Quality Human Reconstruction via Diffusion-based Stereo Using Sparse Cameras. Ruizhi Shao, Zerong Zheng, Hongwen Zhang, Jingxiang Sun, and Yebin Liu. In ECCV 2022.

We propose DiffuStereo, a novel system using only sparse cameras (8 in this work) for high-quality 3D human reconstruction. At its core is a novel diffusion-based stereo module, which introduces diffusion models, a type of powerful generative models, into the iterative stereo matching network. To this end, we design a new diffusion kernel and additional stereo constraints to facilitate stereo matching and depth estimation in the network. We further present a multi-level stereo network architecture to handle high-resolution (up to 4k) inputs without requiring unaffordable memory footprint. Given a set of sparse-view color images of a human, the proposed multi-level diffusion-based stereo network can produce highly accurate depth maps, which are then converted into a high-quality 3D human model through an efficient multi-view fusion strategy. Overall, our method enables automatic reconstruction of human models with quality on par to high-end dense-view camera rigs, and this is achieved using a much more light-weight hardware setup. Experiments show that our method outperforms state-of-the-art methods by a large margin both qualitatively and quantitatively.

human-reconstruction

@inproceedings{DiffuStereo, title = {DiffuStereo: High Quality Human Reconstruction via Diffusion-based Stereo Using Sparse Cameras}, author = {Shao, Ruizhi and Zheng, Zerong and Zhang, Hongwen and Sun, Jingxiang and Liu, Yebin}, year = {2022}, tags = {human-reconstruction}, booktitle = {ECCV}, }

-

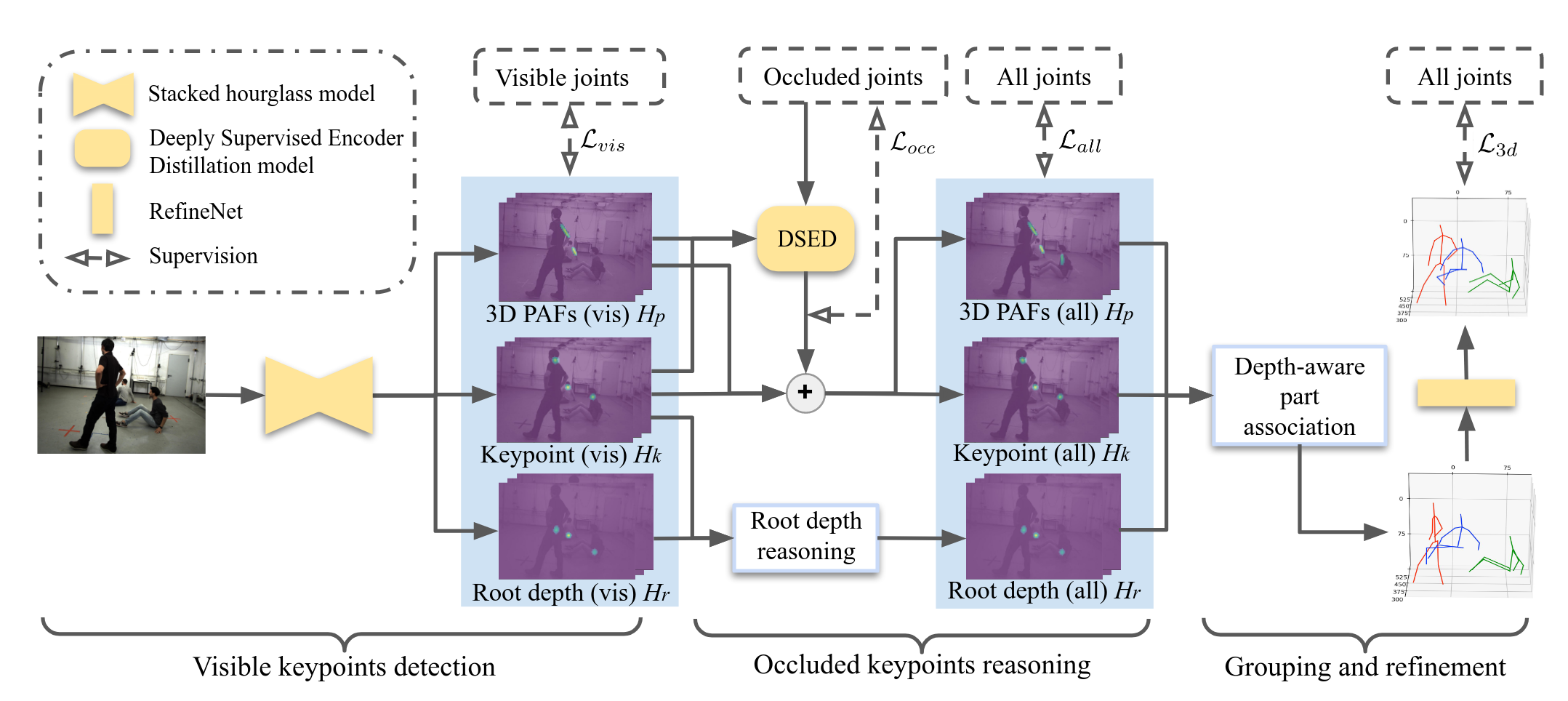

Explicit Occlusion Reasoning for Multi-person 3D Human Pose Estimation. Qihao Liu, Yi Zhang, Song Bai, and Alan Yuille. In ECCV 2022.

Occlusion poses a great threat to monocular multi-person 3D human pose estimation due to large variability in terms of the shape, appearance, and position of occluders. While existing methods try to handle occlusion with pose priors/constraints, data augmentation, or implicit reasoning, they still fail to generalize to unseen poses or occlusion cases and may make large mistakes when multiple people are present. Inspired by the remarkable ability of humans to infer occluded joints from visible cues, we develop a method to explicitly model this process that significantly improves bottom-up multi-person human pose estimation with or without occlusions. First, we split the task into two subtasks: visible keypoints detection and occluded keypoints reasoning, and propose a Deeply Supervised Encoder Distillation (DSED) network to solve the second one. To train our model, we propose a Skeleton-guided human Shape Fitting (SSF) approach to generate pseudo occlusion labels on the existing datasets, enabling explicit occlusion reasoning. Experiments show that explicitly learning from occlusions improves human pose estimation. In addition, exploiting feature-level information of visible joints allows us to reason about occluded joints more accurately. Our method outperforms both the state-of-the-art top-down and bottom-up methods on several benchmarks.

Estimates the occluded data first, then performs association.

human-pose-estimation 1vmp

@inproceedings{Occulusion_reasoning, title = {Explicit Occlusion Reasoning for Multi-person 3D Human Pose Estimation}, author = {Liu, Qihao and Zhang, Yi and Bai, Song and Yuille, Alan}, year = {2022}, tags = {human-pose-estimation, 1vmp}, booktitle = {ECCV}, }

2021

- Learning Temporal 3D Human Pose Estimation with Pseudo-Labels. Arij Bouazizi, Ulrich Kressel, and Vasileios Belagiannis. 2021.

We present a simple, yet effective, approach for self-supervised 3D human pose estimation. Unlike the prior work, we explore the temporal information next to the multi-view self-supervision. During training, we rely on triangulating 2D body pose estimates of a multiple-view camera system. A temporal convolutional neural network is trained with the generated 3D ground-truth and the geometric multi-view consistency loss, imposing geometrical constraints on the predicted 3D body skeleton. During inference, our model receives a sequence of 2D body pose estimates from a single-view to predict the 3D body pose for each of them. An extensive evaluation shows that our method achieves state-of-the-art performance in the Human3.6M and MPI-INF-3DHP benchmarks. Our code and models are publicly available at https://github.com/vru2020/TM_HPE/.

Takes a sequence of 2D keypoints as input and outputs 3D pose, supervised through multi-view consistency.

human monocular 1p 3dpose self-supervised

@inproceedings{temporal3d, title = {Learning Temporal 3D Human Pose Estimation with Pseudo-Labels}, author = {Bouazizi, Arij and Kressel, Ulrich and Belagiannis, Vasileios}, year = {2021}, tags = {human, monocular, 1p, 3dpose, self-supervised}, }

- Direct Multi-view Multi-person 3D Pose Estimation. Tao Wang, Jianfeng Zhang, Yujun Cai, Shuicheng Yan, and Jiashi Feng. 2021.

We present Multi-view Pose transformer (MvP) for estimating multi-person 3D poses from multi-view images. Instead of estimating 3D joint locations from costly volumetric representation or reconstructing the per-person 3D pose from multiple detected 2D poses as in previous methods, MvP directly regresses the multi-person 3D poses in a clean and efficient way, without relying on intermediate tasks. Specifically, MvP represents skeleton joints as learnable query embeddings and lets them progressively attend to and reason over the multi-view information from the input images to directly regress the actual 3D joint locations. To improve the accuracy of such a simple pipeline, MvP presents a hierarchical scheme to concisely represent query embeddings of multi-person skeleton joints and introduces an input-dependent query adaptation approach. Further, MvP designs a novel geometrically guided attention mechanism, called projective attention, to more precisely fuse the cross-view information for each joint. MvP also introduces a RayConv operation to integrate the view-dependent camera geometry into the feature representations for augmenting the projective attention. We show experimentally that our MvP model outperforms the state-of-the-art methods on several benchmarks while being much more efficient. Notably, it achieves 92.3% AP25 on the challenging Panoptic dataset, improving upon the previous best approach [36] by 9.8%. MvP is general and also extendable to recovering human mesh represented by the SMPL model, thus useful for modeling multi-person body shapes. Code and models are available at https://github.com/sail-sg/mvp.

Multi-view features are aggregated directly by a transformer.

human mv mp 3dpose transformer

@inproceedings{DMVMP, title = {Direct Multi-view Multi-person 3D Pose Estimation}, author = {Wang, Tao and Zhang, Jianfeng and Cai, Yujun and Yan, Shuicheng and Feng, Jiashi}, year = {2021}, tags = {human, mv, mp, 3dpose, transformer}, }

- Generalizable Human Pose Triangulation. Kristijan Bartol, David Bojanić, Tomislav Petković, and Tomislav Pribanić. 2021.

We address the problem of generalizability for multi-view 3D human pose estimation. The standard approach is to first detect 2D keypoints in images and then apply triangulation from multiple views. Even though the existing methods achieve remarkably accurate 3D pose estimation on public benchmarks, most of them are limited to a single spatial camera arrangement and their number. Several methods address this limitation but demonstrate significantly degraded performance on novel views. We propose a stochastic framework for human pose triangulation and demonstrate a superior generalization across different camera arrangements on two public datasets. In addition, we apply the same approach to the fundamental matrix estimation problem, showing that the proposed method can successfully apply to other computer vision problems. The stochastic framework achieves more than 8.8% improvement on the 3D pose estimation task, compared to the state-of-the-art, and more than 30% improvement for fundamental matrix estimation, compared to a standard algorithm.

Proposes a framework that addresses the problem of generalizable triangulation.

human mv 1p 3dpose

@inproceedings{GeneralTri, title = {Generalizable Human Pose Triangulation}, author = {Bartol, Kristijan and Bojanić, David and Petković, Tomislav and Pribanić, Tomislav}, year = {2021}, tags = {human, mv, 1p, 3dpose}, }

- Adaptive Multi-view and Temporal Fusing Transformer for 3D Human Pose Estimation. Hui Shuai, Lele Wu, and Qingshan Liu. In 2021

This paper proposes a unified framework dubbed Multi-view and Temporal Fusing Transformer (MTF-Transformer) to adaptively handle varying view numbers and video length without camera calibration in 3D Human Pose Estimation (HPE). It consists of Feature Extractor, Multi-view Fusing Transformer (MFT), and Temporal Fusing Transformer (TFT). Feature Extractor estimates 2D pose from each image and fuses the prediction according to the confidence. It provides pose-focused feature embedding and makes subsequent modules computationally lightweight. MFT fuses the features of a varying number of views with a novel Relative-Attention block. It adaptively measures the implicit relative relationship between each pair of views and reconstructs more informative features. TFT aggregates the features of the whole sequence and predicts 3D pose via a transformer. It adaptively deals with the video of arbitrary length and fully utilizes the temporal information. The migration of transformers enables our model to learn spatial geometry better and preserve robustness for varying application scenarios. We report quantitative and qualitative results on the Human3.6M, TotalCapture, and KTH Multiview Football II. Compared with state-of-the-art methods with camera parameters, MTF-Transformer obtains competitive results and generalizes well to dynamic capture with an arbitrary number of unseen views.

One transformer fuses multi-view features and a second transformer fuses temporal information.

human mv 1p 3dpose transformer

@inproceedings{2110.05092, title = {Adaptive Multi-view and Temporal Fusing Transformer for 3D Human Pose Estimation}, author = {Shuai, Hui and Wu, Lele and Liu, Qingshan}, year = {2021}, tags = {human, mv, 1p, 3dpose, transformer}, }

- Semi-supervised Dense Keypoints using Unlabeled Multiview Images. Zhixuan Yu, Haozheng Yu, Long Sha, Sujoy Ganguly, and Hyun Soo Park. In 2021

This paper presents a new end-to-end semi-supervised framework to learn a dense keypoint detector using unlabeled multiview images. A key challenge lies in finding the exact correspondences between the dense keypoints in multiple views since the inverse of keypoint mapping can be neither analytically derived nor differentiated. This limits applying existing multiview supervision approaches on sparse keypoint detection that rely on the exact correspondences. To address this challenge, we derive a new probabilistic epipolar constraint that encodes the two desired properties. (1) Soft correspondence: we define a matchability, which measures a likelihood of a point matching to the other image’s corresponding point, thus relaxing the exact correspondences’ requirement. (2) Geometric consistency: every point in the continuous correspondence fields must satisfy the multiview consistency collectively. We formulate a probabilistic epipolar constraint using a weighted average of epipolar errors through the matchability thereby generalizing the point-to-point geometric error to the field-to-field geometric error. This generalization facilitates learning a geometrically coherent dense keypoint detection model by utilizing a large number of unlabeled multiview images. Additionally, to prevent degenerative cases, we employ a distillation-based regularization by using a pretrained model. Finally, we design a new neural network architecture, made of twin networks, that effectively minimizes the probabilistic epipolar errors of all possible correspondences between two view images by building affinity matrices. Our method shows superior performance compared to existing methods, including non-differentiable bootstrapping in terms of keypoint accuracy, multiview consistency, and 3D reconstruction accuracy.

Proposes soft correspondences that are constrained through geometric consistency.
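The "weighted average of epipolar errors through the matchability" mentioned in the abstract can be illustrated with a small sketch. This is a simplified reading, not the authors' formulation: the function names, the point-to-line distance used as the epipolar error, and the normalization by the sum of matchability scores are all assumptions made for illustration.

```python
import math


def epipolar_distance(F, x, x_prime):
    """Distance from x_prime (second view) to the epipolar line F @ x of x (first view)."""
    xh = (x[0], x[1], 1.0)
    # Epipolar line coefficients (a, b, c) in the second image.
    line = [sum(F[r][c] * xh[c] for c in range(3)) for r in range(3)]
    numerator = abs(line[0] * x_prime[0] + line[1] * x_prime[1] + line[2])
    return numerator / math.hypot(line[0], line[1])


def soft_epipolar_error(F, x, candidates, matchability):
    """Matchability-weighted average of epipolar distances over candidate matches.

    Hypothetical helper: `matchability` holds one non-negative score per candidate.
    """
    total = sum(matchability)
    weighted = sum(m * epipolar_distance(F, x, c)
                   for c, m in zip(candidates, matchability))
    return weighted / total


# Fundamental matrix of a rectified pair (pure horizontal translation):
# the epipolar line of (u, v) is the row y' = v in the second image.
F_rectified = [[0.0, 0.0, 0.0],
               [0.0, 0.0, -1.0],
               [0.0, 1.0, 0.0]]
error = soft_epipolar_error(F_rectified, (3.0, 2.0),
                            [(5.0, 2.0), (1.0, 4.0)], [0.75, 0.25])
```

Because every candidate contributes in proportion to its matchability, no hard one-to-one correspondence has to be chosen, which is the relaxation the abstract describes.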

human mv 1p densepose semi-supervised

@inproceedings{semidense, title = {Semi-supervised Dense Keypoints using Unlabeled Multiview Images}, author = {Yu, Zhixuan and Yu, Haozheng and Sha, Long and Ganguly, Sujoy and Park, Hyun Soo}, year = {2021}, tags = {human, mv, 1p, densepose, semi-supervised}, }

- Graph-Based 3D Multi-Person Pose Estimation Using Multi-View Images. Size Wu, Sheng Jin, Wentao Liu, Lei Bai, Chen Qian, Dong Liu, and Wanli Ouyang. In 2021

This paper studies the task of estimating the 3D human poses of multiple persons from multiple calibrated camera views. Following the top-down paradigm, we decompose the task into two stages, i.e. person localization and pose estimation. Both stages are processed in coarse-to-fine manners. And we propose three task-specific graph neural networks for effective message passing. For 3D person localization, we first use Multi-view Matching Graph Module (MMG) to learn the cross-view association and recover coarse human proposals. The Center Refinement Graph Module (CRG) further refines the results via flexible point-based prediction. For 3D pose estimation, the Pose Regression Graph Module (PRG) learns both the multi-view geometry and structural relations between human joints. Our approach achieves state-of-the-art performance on CMU Panoptic and Shelf datasets with significantly lower computation complexity.

Uses graph networks to learn the cross-view correspondences between multiple people.

human mv mp 3dpose GNN@inproceedings{graphmp, title = {Graph-Based 3D Multi-Person Pose Estimation Using Multi-View Images}, author = {Wu, Size and Jin, Sheng and Liu, Wentao and Bai, Lei and Qian, Chen and Liu, Dong and Ouyang, Wanli}, year = {2021}, tags = {human, mv, mp, 3dpose, GNN}, } -

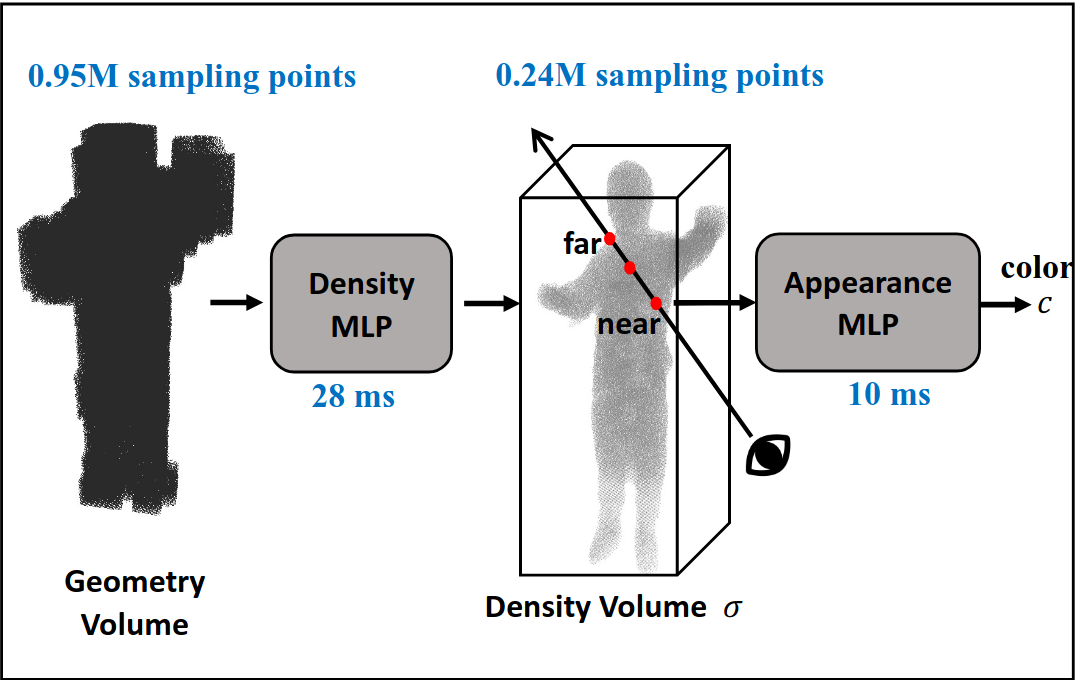

Geometry-Guided Progressive NeRF for Generalizable and Efficient Neural Human RenderingMingfei Chen, Jianfeng Zhang, Xiangyu Xu, Lijuan Liu, Yujun Cai, Jiashi Feng, and Shuicheng YanIn ECCV 2021

Geometry-Guided Progressive NeRF for Generalizable and Efficient Neural Human RenderingMingfei Chen, Jianfeng Zhang, Xiangyu Xu, Lijuan Liu, Yujun Cai, Jiashi Feng, and Shuicheng YanIn ECCV 2021In this work we develop a generalizable and efficient Neural Radiance Field(NeRF) pipeline for high-fidelity free-viewpoint human body synthesis undersettings with sparse camera views. Though existing NeRF-based methods cansynthesize rather realistic details for human body, they tend to produce poorresults when the input has self-occlusion, especially for unseen humans undersparse views. Moreover, these methods often require a large number of samplingpoints for rendering, which leads to low efficiency and limits their real-worldapplicability. To address these challenges, we propose a Geometry-guidedProgressive NeRF (GP-NeRF). In particular, to better tackle self-occlusion, wedevise a geometry-guided multi-view feature integration approach that utilizesthe estimated geometry prior to integrate the incomplete information from inputviews and construct a complete geometry volume for the target human body.Meanwhile, for achieving higher rendering efficiency, we introduce aprogressive rendering pipeline through geometry guidance, which leverages thegeometric feature volume and the predicted density values to progressivelyreduce the number of sampling points and speed up the rendering process.Experiments on the ZJU-MoCap and THUman datasets show that our methodoutperforms the state-of-the-arts significantly across multiple generalizationsettings, while the time cost is reduced > 70% via applying our efficientprogressive rendering pipeline.

Geometry-guided image feature integration produces a density volume, which reduces the number of sampling points.

human-nerf accelerate@inproceedings{GGNerf, title = {Geometry-Guided Progressive NeRF for Generalizable and Efficient Neural Human Rendering}, author = {Chen, Mingfei and Zhang, Jianfeng and Xu, Xiangyu and Liu, Lijuan and Cai, Yujun and Feng, Jiashi and Yan, Shuicheng}, year = {2021}, tags = {human-nerf, accelerate}, booktitle = {ECCV}, } -

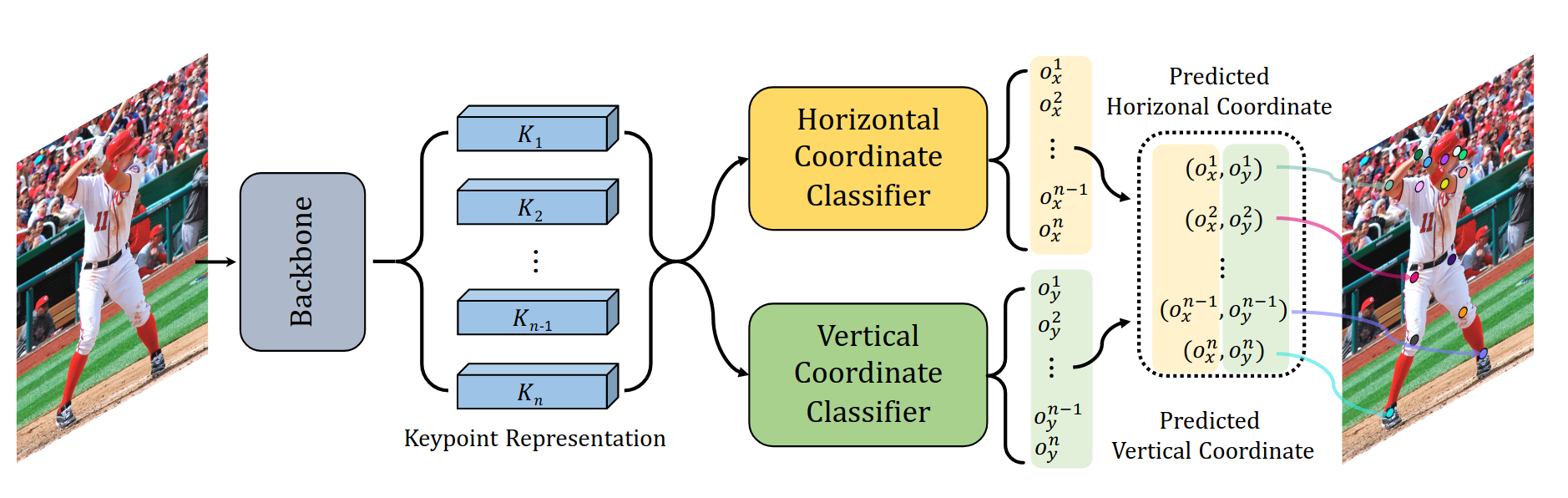

SimCC: a Simple Coordinate Classification Perspective for Human Pose EstimationYanjie Li, Sen Yang, Peidong Liu, Shoukui Zhang, Yunxiao Wang, Zhicheng Wang, Wankou Yang, and Shu-Tao XiaIn ECCV 2021

SimCC: a Simple Coordinate Classification Perspective for Human Pose EstimationYanjie Li, Sen Yang, Peidong Liu, Shoukui Zhang, Yunxiao Wang, Zhicheng Wang, Wankou Yang, and Shu-Tao XiaIn ECCV 2021The 2D heatmap-based approaches have dominated Human Pose Estimation (HPE)for years due to high performance. However, the long-standing quantizationerror problem in the 2D heatmap-based methods leads to several well-knowndrawbacks: 1) The performance for the low-resolution inputs is limited; 2) Toimprove the feature map resolution for higher localization precision, multiplecostly upsampling layers are required; 3) Extra post-processing is adopted toreduce the quantization error. To address these issues, we aim to explore abrand new scheme, called \textitSimCC, which reformulates HPE as twoclassification tasks for horizontal and vertical coordinates. The proposedSimCC uniformly divides each pixel into several bins, thus achieving\emphsub-pixel localization precision and low quantization error. Benefitingfrom that, SimCC can omit additional refinement post-processing and excludeupsampling layers under certain settings, resulting in a more simple andeffective pipeline for HPE. Extensive experiments conducted over COCO,CrowdPose, and MPII datasets show that SimCC outperforms heatmap-basedcounterparts, especially in low-resolution settings by a large margin.

Views 2D human pose estimation as a coordinate classification problem.

human-pose-estimation

@inproceedings{SimCC, title = {SimCC: a Simple Coordinate Classification Perspective for Human Pose Estimation}, author = {Li, Yanjie and Yang, Sen and Liu, Peidong and Zhang, Shoukui and Wang, Yunxiao and Wang, Zhicheng and Yang, Wankou and Xia, Shu-Tao}, year = {2021}, tags = {human-pose-estimation}, booktitle = {ECCV}, }
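As a rough illustration of the SimCC idea described in the abstract above (each pixel divided into several bins, one classification per axis), the sketch below encodes a keypoint as two bin indices and decodes it again. The function names and the parameter `k` (bins per pixel) are assumptions for illustration, and the one-hot vectors stand in for the per-axis scores a network would predict.

```python
def encode_simcc(x, y, width, height, k=2):
    """Turn a continuous pixel coordinate into one class index per axis."""
    # Each pixel is split into k bins, so an axis of W pixels has W * k classes.
    x_bin = min(max(int(x * k + 0.5), 0), width * k - 1)
    y_bin = min(max(int(y * k + 0.5), 0), height * k - 1)
    return x_bin, y_bin


def decode_simcc(x_scores, y_scores, k=2):
    """Pick the highest-scoring bin on each axis and map it back to pixels."""
    x_bin = max(range(len(x_scores)), key=lambda i: x_scores[i])
    y_bin = max(range(len(y_scores)), key=lambda i: y_scores[i])
    return x_bin / k, y_bin / k


def one_hot(index, length):
    """Stand-in for a classifier output that is fully confident in one bin."""
    return [1.0 if i == index else 0.0 for i in range(length)]


x_bin, y_bin = encode_simcc(10.5, 3.0, width=64, height=48, k=2)
decoded = decode_simcc(one_hot(x_bin, 64 * 2), one_hot(y_bin, 48 * 2), k=2)
```

With `k = 2` the half-pixel coordinate 10.5 survives the round trip, which a one-bin-per-pixel encoding could not represent.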

2020

- Rethinking the Heatmap Regression for Bottom-up Human Pose Estimation. Zhengxiong Luo, Zhicheng Wang, Yan Huang, Tieniu Tan, and Erjin Zhou. In 2020

Heatmap regression has become the most prevalent choice for nowadays human pose estimation methods. The ground-truth heatmaps are usually constructed via covering all skeletal keypoints by 2D gaussian kernels. The standard deviations of these kernels are fixed. However, for bottom-up methods, which need to handle a large variance of human scales and labeling ambiguities, the current practice seems unreasonable. To better cope with these problems, we propose the scale-adaptive heatmap regression (SAHR) method, which can adaptively adjust the standard deviation for each keypoint. In this way, SAHR is more tolerant of various human scales and labeling ambiguities. However, SAHR may aggravate the imbalance between fore-background samples, which potentially hurts the improvement of SAHR. Thus, we further introduce the weight-adaptive heatmap regression (WAHR) to help balance the fore-background samples. Extensive experiments show that SAHR together with WAHR largely improves the accuracy of bottom-up human pose estimation. As a result, we finally outperform the state-of-the-art model by +1.5AP and achieve 72.0AP on COCO test-dev2017, which is comparable with the performances of most top-down methods. Source codes are available at https://github.com/greatlog/SWAHR-HumanPose.

Balances the Gaussian kernel size of the heatmaps for people at different distances.
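A minimal sketch of what a per-keypoint standard deviation means for the ground-truth heatmap. In the paper the adjustment is learned; here the `scale` factor is simply passed in, and the function names and `base_sigma` are assumptions for illustration.

```python
import math


def gaussian_heatmap(width, height, cx, cy, sigma):
    """Ground-truth heatmap: a 2D Gaussian centred on the keypoint (cx, cy)."""
    return [[math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2.0 * sigma ** 2))
             for x in range(width)]
            for y in range(height)]


def scale_adaptive_heatmap(width, height, cx, cy, base_sigma, scale):
    """Same Gaussian, but with the standard deviation rescaled for this keypoint."""
    # `scale` is a hypothetical per-keypoint factor; larger people get wider kernels.
    return gaussian_heatmap(width, height, cx, cy, base_sigma * scale)


fixed = gaussian_heatmap(9, 9, 4, 4, sigma=1.0)
adapted = scale_adaptive_heatmap(9, 9, 4, 4, base_sigma=1.0, scale=2.0)
```

Two pixels away from the keypoint the adapted heatmap is still well above the fixed one, so a small labeling offset on a large person is penalized less.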

human image 2dpose

@inproceedings{2012.15175, title = {Rethinking the Heatmap Regression for Bottom-up Human Pose Estimation}, author = {Luo, Zhengxiong and Wang, Zhicheng and Huang, Yan and Tan, Tieniu and Zhou, Erjin}, year = {2020}, tags = {human, image, 2dpose}, }

- AdaFuse: Adaptive Multiview Fusion for Accurate Human Pose Estimation in the Wild. Zhe Zhang, Chunyu Wang, Weichao Qiu, Wenhu Qin, and Wenjun Zeng. In 2020

Occlusion is probably the biggest challenge for human pose estimation in the wild. Typical solutions often rely on intrusive sensors such as IMUs to detect occluded joints. To make the task truly unconstrained, we present AdaFuse, an adaptive multiview fusion method, which can enhance the features in occluded views by leveraging those in visible views. The core of AdaFuse is to determine the point-point correspondence between two views which we solve effectively by exploring the sparsity of the heatmap representation. We also learn an adaptive fusion weight for each camera view to reflect its feature quality in order to reduce the chance that good features are undesirably corrupted by “bad” views. The fusion model is trained end-to-end with the pose estimation network, and can be directly applied to new camera configurations without additional adaptation. We extensively evaluate the approach on three public datasets including Human3.6M, Total Capture and CMU Panoptic. It outperforms the state-of-the-arts on all of them. We also create a large scale synthetic dataset Occlusion-Person, which allows us to perform numerical evaluation on the occluded joints, as it provides occlusion labels for every joint in the images. The dataset and code are released at https://github.com/zhezh/adafuse-3d-human-pose.

Takes the keypoint heatmaps estimated from all views as joint input and outputs 2D keypoints.

human mv 1p 3dpose

@inproceedings{AdaFuse, title = {AdaFuse: Adaptive Multiview Fusion for Accurate Human Pose Estimation in the Wild}, author = {Zhang, Zhe and Wang, Chunyu and Qiu, Weichao and Qin, Wenhu and Zeng, Wenjun}, year = {2020}, tags = {human, mv, 1p, 3dpose}, }

2019

- Learnable Triangulation of Human Pose. Karim Iskakov, Egor Burkov, Victor Lempitsky, and Yury Malkov. In 2019

We present two novel solutions for multi-view 3D human pose estimation based on new learnable triangulation methods that combine 3D information from multiple 2D views. The first (baseline) solution is a basic differentiable algebraic triangulation with an addition of confidence weights estimated from the input images. The second solution is based on a novel method of volumetric aggregation from intermediate 2D backbone feature maps. The aggregated volume is then refined via 3D convolutions that produce final 3D joint heatmaps and allow modelling a human pose prior. Crucially, both approaches are end-to-end differentiable, which allows us to directly optimize the target metric. We demonstrate transferability of the solutions across datasets and considerably improve the multi-view state of the art on the Human3.6M dataset. Video demonstration, annotations and additional materials will be posted on our project page (https://saic-violet.github.io/learnable-triangulation).

Features from multiple views are back-projected into 3D space, and a 3D network produces the final output.
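The baseline in the abstract above, algebraic triangulation with per-view confidence weights, can be sketched in a few lines. This is a simplified variant rather than the paper's implementation: it solves the inhomogeneous least-squares system through the normal equations instead of an SVD on the homogeneous system, takes the weights as given instead of predicting them, and all function names are assumptions.

```python
def project(P, X):
    """Project a 3D point X with a 3x4 camera matrix P to pixel coordinates."""
    h = [sum(P[r][c] * v for c, v in enumerate((*X, 1.0))) for r in range(3)]
    return h[0] / h[2], h[1] / h[2]


def det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(A, b):
    """Solve a 3x3 linear system with Cramer's rule."""
    d = det3(A)
    solution = []
    for i in range(3):
        m = [row[:] for row in A]
        for r in range(3):
            m[r][i] = b[r]
        solution.append(det3(m) / d)
    return solution


def weighted_triangulate(projections, points2d, weights):
    """Weighted linear triangulation of one joint from several calibrated views."""
    rows = []
    for P, (u, v), w in zip(projections, points2d, weights):
        # Two DLT rows per view, scaled by that view's confidence weight.
        rows.append([w * (u * P[2][c] - P[0][c]) for c in range(4)])
        rows.append([w * (v * P[2][c] - P[1][c]) for c in range(4)])
    # Normal equations for rows[:, :3] @ X = -rows[:, 3].
    AtA = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    Atb = [sum(r[i] * -r[3] for r in rows) for i in range(3)]
    return solve3(AtA, Atb)


# Two toy cameras: one at the origin, one shifted by one unit along x.
P1 = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]]
P2 = [[1.0, 0.0, 0.0, -1.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]]
joint = (0.5, 0.2, 2.0)
observations = [project(P1, joint), project(P2, joint)]
recovered = weighted_triangulate([P1, P2], observations, [1.0, 0.3])
```

Lowering a view's weight shrinks its two rows, so a view with an unreliable 2D detection pulls the solution less; with noise-free observations, as here, any positive weights recover the same point.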

human mv 1p 3dpose e2e

@inproceedings{lrtri, title = {Learnable Triangulation of Human Pose}, author = {Iskakov, Karim and Burkov, Egor and Lempitsky, Victor and Malkov, Yury}, year = {2019}, tags = {human, mv, 1p, 3dpose, e2e}, }

- Cross View Fusion for 3D Human Pose Estimation. Haibo Qiu, Chunyu Wang, Jingdong Wang, Naiyan Wang, and Wenjun Zeng. In 2019

We present an approach to recover absolute 3D human poses from multi-view images by incorporating multi-view geometric priors in our model. It consists of two separate steps: (1) estimating the 2D poses in multi-view images and (2) recovering the 3D poses from the multi-view 2D poses. First, we introduce a cross-view fusion scheme into CNN to jointly estimate 2D poses for multiple views. Consequently, the 2D pose estimation for each view already benefits from other views. Second, we present a recursive Pictorial Structure Model to recover the 3D pose from the multi-view 2D poses. It gradually improves the accuracy of 3D pose with affordable computational cost. We test our method on two public datasets H36M and Total Capture. The Mean Per Joint Position Errors on the two datasets are 26mm and 29mm, which outperforms the state-of-the-arts remarkably (26mm vs 52mm, 29mm vs 35mm). Our code is released at https://github.com/microsoft/multiview-human-pose-estimation-pytorch.

Direct multi-view fusion.

human mv 1p 3dpose

@inproceedings{crossviewfusion, title = {Cross View Fusion for 3D Human Pose Estimation}, author = {Qiu, Haibo and Wang, Chunyu and Wang, Jingdong and Wang, Naiyan and Zeng, Wenjun}, year = {2019}, tags = {human, mv, 1p, 3dpose}, }
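The Cross View Fusion abstract above reports its results as Mean Per Joint Position Error. For readers unfamiliar with the metric, a minimal sketch follows; the function name and the toy joints are assumptions, and benchmark protocols may apply an alignment step first that is not shown here.

```python
import math


def mpjpe(predicted, ground_truth):
    """Mean Euclidean distance between predicted and ground-truth 3D joints."""
    distances = [math.dist(p, g) for p, g in zip(predicted, ground_truth)]
    return sum(distances) / len(distances)


# Toy pose with two joints, coordinates in millimetres.
pred = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0)]
truth = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0)]
error_mm = mpjpe(pred, truth)
```

The result is in the same unit as the joint coordinates, which is why the abstract quotes its errors in millimetres.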

2018

- Self-supervised Multi-view Person Association and Its Applications. Minh Vo, Ersin Yumer, Kalyan Sunkavalli, Sunil Hadap, Yaser Sheikh, and Srinivasa Narasimhan. In 2018

Reliable markerless motion tracking of people participating in a complex group activity from multiple moving cameras is challenging due to frequent occlusions, strong viewpoint and appearance variations, and asynchronous video streams. To solve this problem, reliable association of the same person across distant viewpoints and temporal instances is essential. We present a self-supervised framework to adapt a generic person appearance descriptor to the unlabeled videos by exploiting motion tracking, mutual exclusion constraints, and multi-view geometry. The adapted discriminative descriptor is used in a tracking-by-clustering formulation. We validate the effectiveness of our descriptor learning on WILDTRACK [14] and three new complex social scenes captured by multiple cameras with up to 60 people "in the wild". We report significant improvement in association accuracy (up to 18%) and stable and coherent 3D human skeleton tracking (5 to 10 times) over the baseline. Using the reconstructed 3D skeletons, we cut the input videos into a multi-angle video where the image of a specified person is shown from the best visible front-facing camera. Our algorithm detects inter-human occlusion to determine the camera switching moment while still maintaining the flow of the action well.

Self-supervised feature learning is used to cluster people.

human mv mp 3dpose self-supervised

@inproceedings{MVPAssociation, title = {Self-supervised Multi-view Person Association and Its Applications}, author = {Vo, Minh and Yumer, Ersin and Sunkavalli, Kalyan and Hadap, Sunil and Sheikh, Yaser and Narasimhan, Srinivasa}, year = {2018}, tags = {human, mv, mp, 3dpose, self-supervised}, }

2017

- Harvesting Multiple Views for Marker-less 3D Human Pose Annotations. Georgios Pavlakos, Xiaowei Zhou, Konstantinos G. Derpanis, and Kostas Daniilidis. In 2017

Recent advances with Convolutional Networks (ConvNets) have shifted the bottleneck for many computer vision tasks to annotated data collection. In this paper, we present a geometry-driven approach to automatically collect annotations for human pose prediction tasks. Starting from a generic ConvNet for 2D human pose, and assuming a multi-view setup, we describe an automatic way to collect accurate 3D human pose annotations. We capitalize on constraints offered by the 3D geometry of the camera setup and the 3D structure of the human body to probabilistically combine per view 2D ConvNet predictions into a globally optimal 3D pose. This 3D pose is used as the basis for harvesting annotations. The benefit of the annotations produced automatically with our approach is demonstrated in two challenging settings: (i) fine-tuning a generic ConvNet-based 2D pose predictor to capture the discriminative aspects of a subject’s appearance (i.e., "personalization"), and (ii) training a ConvNet from scratch for single view 3D human pose prediction without leveraging 3D pose groundtruth. The proposed multi-view pose estimator achieves state-of-the-art results on standard benchmarks, demonstrating the effectiveness of our method in exploiting the available multi-view information.

Multi-view heatmaps are combined into 3D features, and a 3D pictorial structures model then recovers the skeleton position.

human mv 1p 3dpose

@inproceedings{harvesting, title = {Harvesting Multiple Views for Marker-less 3D Human Pose Annotations}, author = {Pavlakos, Georgios and Zhou, Xiaowei and Derpanis, Konstantinos G. and Daniilidis, Kostas}, year = {2017}, tags = {human, mv, 1p, 3dpose}, }