EasyMoCap is an open-source toolbox for markerless human motion capture from RGB videos. It provides motion capture methods for a range of settings, from a few calibrated cameras to internet videos.

Core features

MoCap Anywhere

We provide the first open-source, practical method for recovering challenging human motion from a small number of calibrated cameras.

The videos are captured outdoors with 9 smartphones. Unlike marker-based motion capture in a studio, our method requires no additional equipment and can be used outdoors.

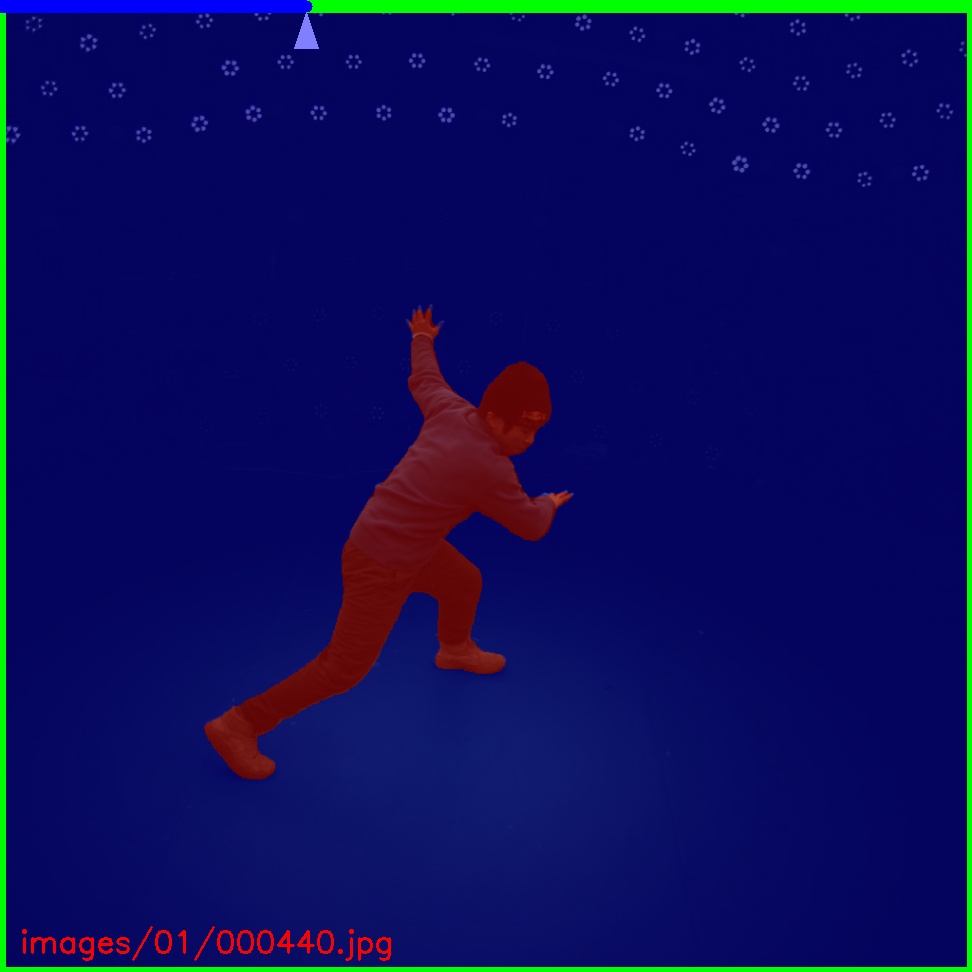
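Recovering motion from calibrated cameras typically starts by triangulating each detected 2D keypoint into 3D. As a rough illustration only (not EasyMocap's implementation), the sketch below performs linear triangulation in pure Python: it builds the standard DLT equations from each view's 3x4 projection matrix, fixes the homogeneous coordinate to 1, and solves the result by least squares. The helper names `projection_matrix`, `project`, and `triangulate_point` and all camera values are hypothetical.

```python
# Linear multi-view triangulation sketch (inhomogeneous DLT, pure Python).
# Each view contributes a 3x4 projection matrix P = K [R | t] and the
# observed pixel (u, v) of the same keypoint. Hypothetical helpers only.


def _det3(m):
    """Determinant of a 3x3 matrix (cofactor expansion along row 0)."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def projection_matrix(K, R, t):
    """Build P = K [R | t] as a 3x4 nested list."""
    Rt = [list(R[i]) + [t[i]] for i in range(3)]
    return [[sum(K[i][k] * Rt[k][j] for k in range(3)) for j in range(4)]
            for i in range(3)]


def project(P, X):
    """Project a 3D point with a 3x4 matrix P; returns pixel (u, v)."""
    Xh = list(X) + [1.0]
    x = [sum(P[i][j] * Xh[j] for j in range(4)) for i in range(3)]
    return x[0] / x[2], x[1] / x[2]


def triangulate_point(projections, pixels):
    """Least-squares 3D point from two or more calibrated views."""
    if len(projections) < 2:
        raise ValueError("need at least two views")
    rows = []
    for P, (u, v) in zip(projections, pixels):
        # u * (P_row3 . X) = P_row1 . X  and  v * (P_row3 . X) = P_row2 . X
        rows.append([u * P[2][k] - P[0][k] for k in range(4)])
        rows.append([v * P[2][k] - P[1][k] for k in range(4)])
    # Fix the homogeneous coordinate to 1, giving B @ [x, y, z] = c
    B = [r[:3] for r in rows]
    c = [-r[3] for r in rows]
    # Normal equations: (B^T B) X = B^T c
    n = len(B)
    M = [[sum(B[k][i] * B[k][j] for k in range(n)) for j in range(3)]
         for i in range(3)]
    b = [sum(B[k][i] * c[k] for k in range(n)) for i in range(3)]
    d = _det3(M)
    if abs(d) < 1e-12:
        raise ValueError("degenerate camera configuration")
    # Solve the 3x3 system with Cramer's rule
    X = []
    for col in range(3):
        Mc = [[b[i] if j == col else M[i][j] for j in range(3)]
              for i in range(3)]
        X.append(_det3(Mc) / d)
    return X


# Two hypothetical cameras 1 unit apart along x, both looking along +z
K = [[1000.0, 0.0, 960.0], [0.0, 1000.0, 540.0], [0.0, 0.0, 1.0]]
I3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
P1 = projection_matrix(K, I3, [0.0, 0.0, 5.0])
P2 = projection_matrix(K, I3, [-1.0, 0.0, 5.0])

X_true = [0.2, -0.1, 0.5]
pixels = [project(P1, X_true), project(P2, X_true)]
X_est = triangulate_point([P1, P2], pixels)  # recovers X_true up to rounding
```

With noise-free pixels the point is recovered exactly up to floating-point error. Real keypoint detections are noisy, so practical pipelines usually add confidence weighting or robust estimation, and may use the SVD form of DLT instead of fixing the homogeneous coordinate.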

Internet video

We also provide a more general motion capture solution for internet videos, which estimates human motion accurately and robustly.

Internet video with a mirror

Multiple Internet videos with a specific action (Coming soon)

Internet videos of Roger Federer serving

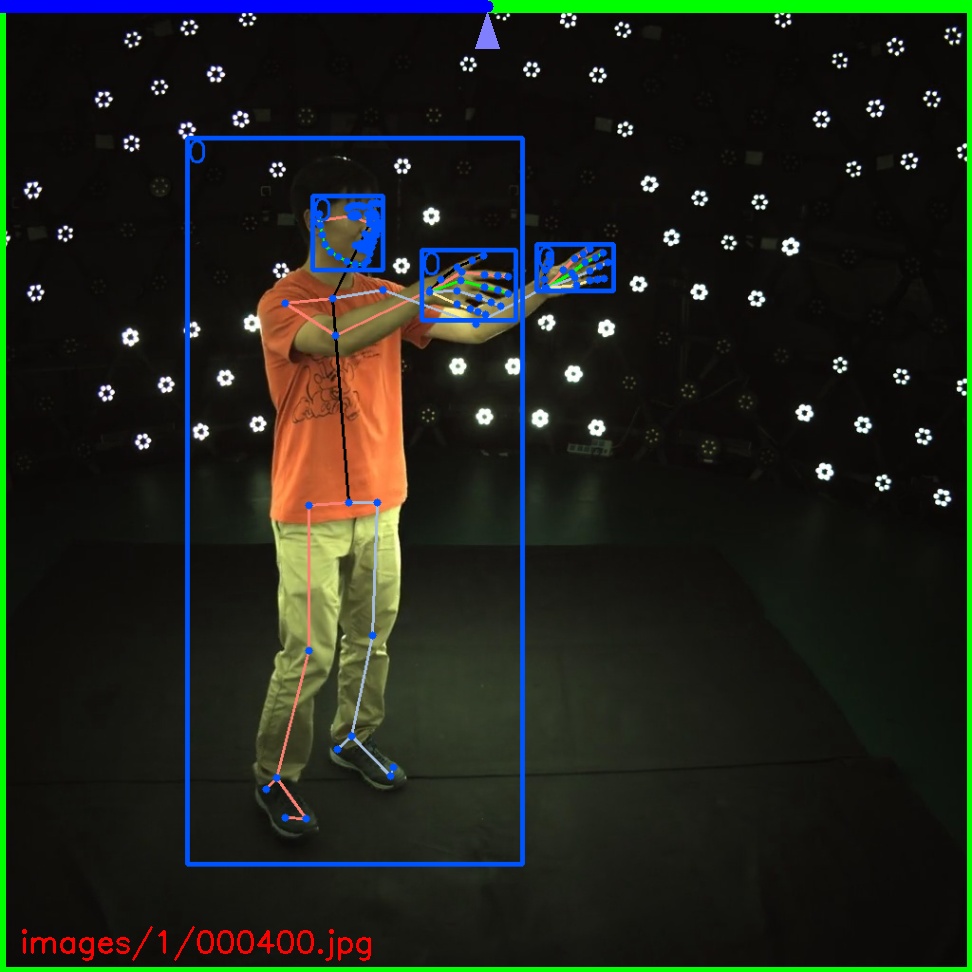

Multiple views of multiple people

Captured with 8 consumer cameras

Novel view synthesis from sparse views

Novel view synthesis for human interaction

ZJU-MoCap

With our proposed methods, we release two large datasets of human motion: LightStage and Mirrored-Human. See the website for more details.

To download the ZJU-MoCap dataset, please sign the agreement and email it to Qing Shuai (s_q@zju.edu.cn), with Xiaowei Zhou (xwzhou@zju.edu.cn) in CC, to request the download link.

LightStage: captured with the LightStage system

Mirrored-Human: collected from the Internet

Other features

Real-time 3D visualization

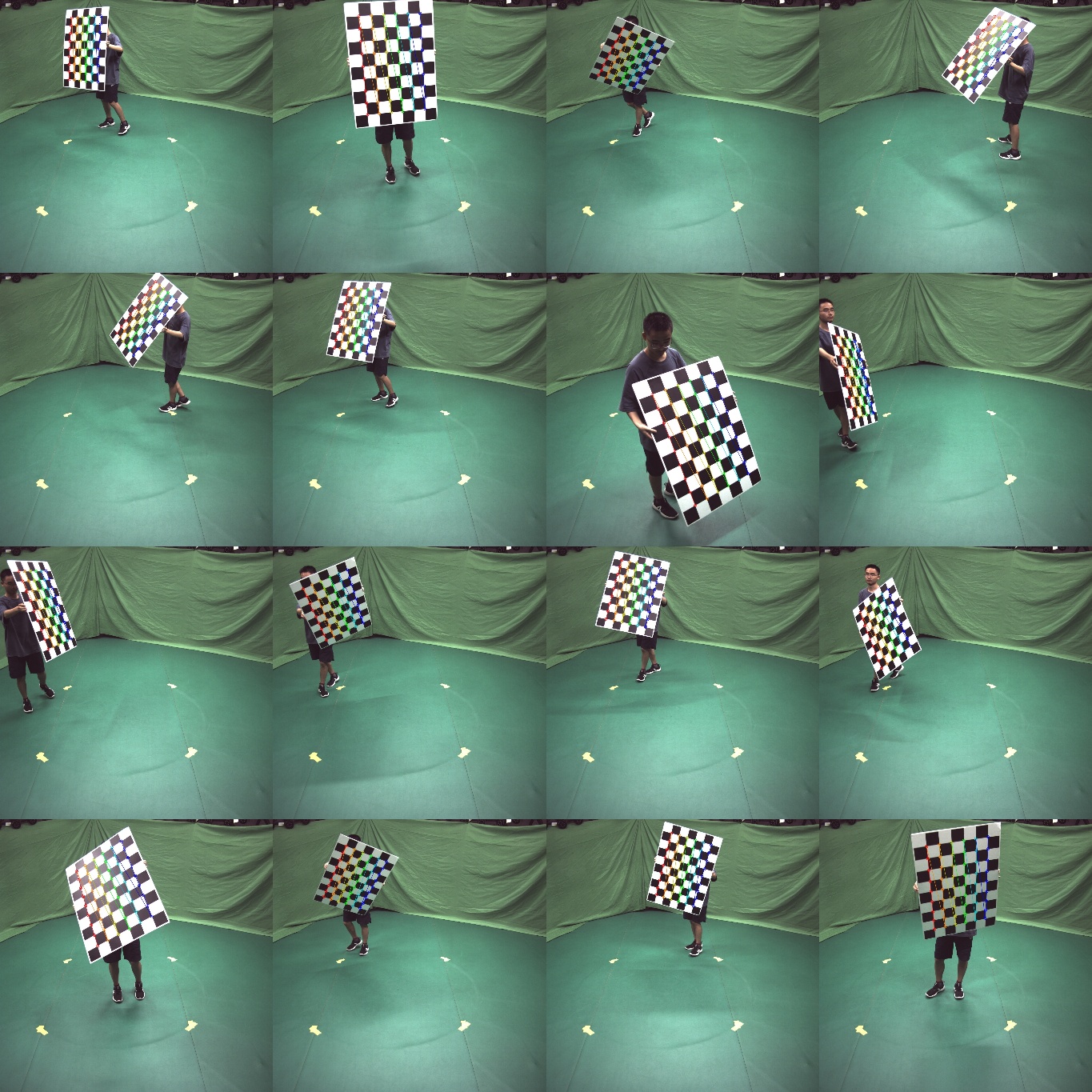

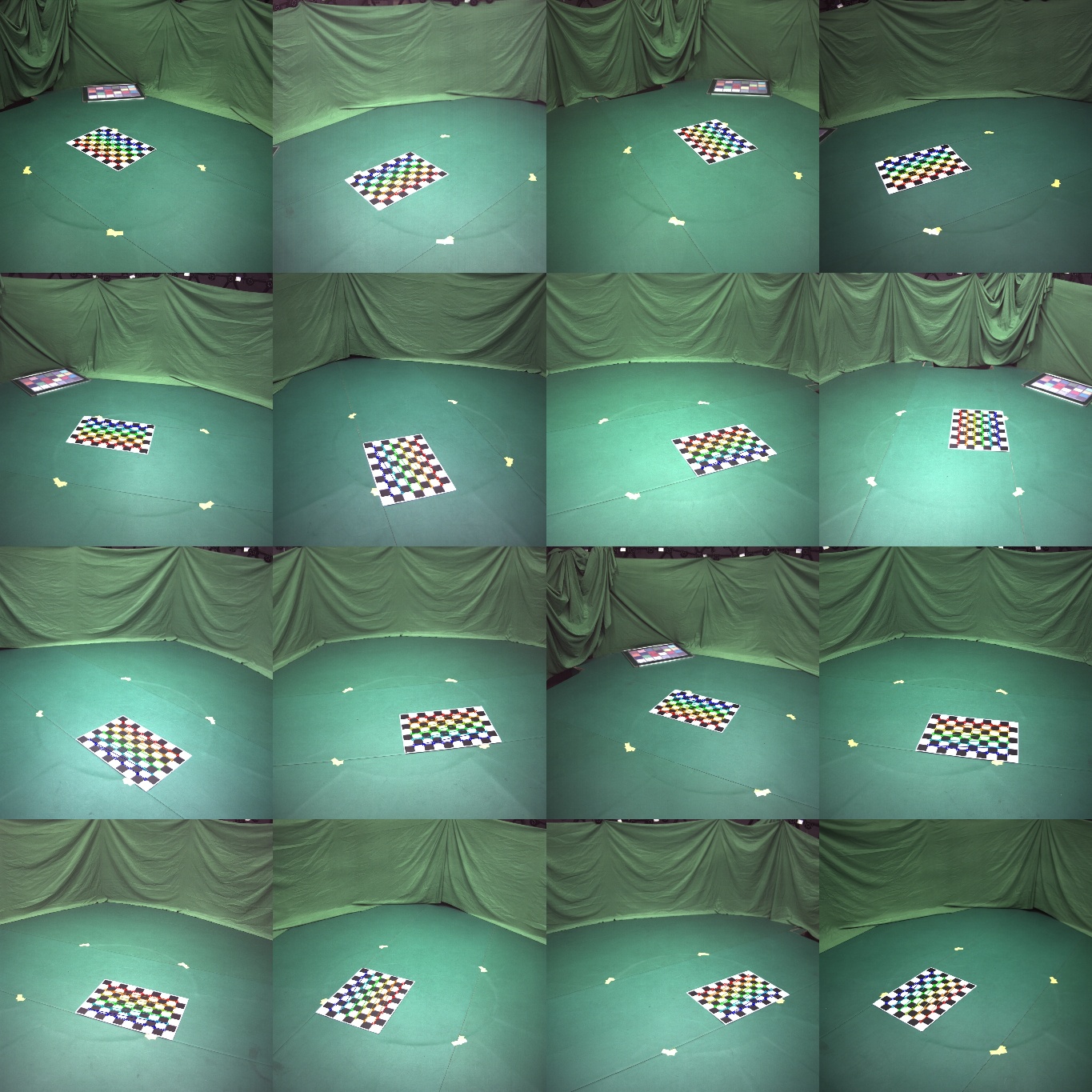

Camera calibration

Calibration for intrinsic and extrinsic parameters
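Calibration estimates two sets of parameters per camera: intrinsics (focal lengths and principal point) and extrinsics (rotation and translation relative to a world frame). The sketch below shows how these are conventionally combined in the pinhole camera model to project a 3D point into an image. Lens distortion is ignored, and the function name `project_point` and the numbers are hypothetical, not taken from EasyMocap.

```python
# Sketch of the standard pinhole camera model (not EasyMocap code).
# Intrinsics K: focal lengths fx, fy and principal point cx, cy.
# Extrinsics R (3x3 rotation) and t (translation) map world -> camera.


def project_point(K, R, t, X):
    """Project a 3D world point X to pixel coordinates (u, v)."""
    # Extrinsics: x_cam = R @ X + t
    x_cam = [sum(R[i][j] * X[j] for j in range(3)) + t[i] for i in range(3)]
    if x_cam[2] <= 0:
        raise ValueError("point is behind the camera")
    # Perspective division onto the normalized image plane
    x_n = x_cam[0] / x_cam[2]
    y_n = x_cam[1] / x_cam[2]
    # Intrinsics: scale by focal length, shift by principal point (zero skew)
    u = K[0][0] * x_n + K[0][2]
    v = K[1][1] * y_n + K[1][2]
    return u, v


# Hypothetical calibration values for a 1920x1080 camera
K = [[1000.0, 0.0, 960.0],
     [0.0, 1000.0, 540.0],
     [0.0, 0.0, 1.0]]
R = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0],
     [0.0, 0.0, 1.0]]
t = [0.0, 0.0, 5.0]  # camera centre at (0, 0, -5), looking along +z

u, v = project_point(K, R, t, [0.5, -0.25, 0.0])  # approximately (1060.0, 490.0)
```

Keeping intrinsics and extrinsics separate mirrors how calibration is usually done: intrinsics are fixed per camera, while extrinsics change whenever the camera is moved.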

Annotator

Annotator for bounding boxes, keypoints, and masks

Updates

- 12/25/2021: Support the MediaPipe keypoint detector.

- 08/09/2021: Add a Colab demo.

- 06/28/2021: The Multi-view Multi-person part is released!

- 06/10/2021: The real-time 3D visualization part is released!

- 04/11/2021: The calibration tool and the annotator are released.

- 04/11/2021: Mirrored-Human part is released.

Installation

See doc/install for installation instructions.

Acknowledgements

Here are the great works this project is built upon:

- The SMPL models and layer are from the MPII SMPL-X model.

- Some functions are borrowed from SPIN, VIBE, and SMPLify-X.

- The method for fitting 3D skeleton and SMPL model is similar to TotalCapture, without using point clouds.

- We integrate easy-to-use functions for some previous great works:

Contact

Please open an issue if you have any questions. We appreciate all contributions to improve our project.

Contributor

EasyMocap is built by researchers from the 3D vision group of Zhejiang University: Qing Shuai, Qi Fang, Junting Dong, Sida Peng, Di Huang, Hujun Bao, and Xiaowei Zhou.

We would like to thank Wenduo Feng, Di Huang, Yuji Chen, Hao Xu, Qing Shuai, Qi Fang, Ting Xie, Junting Dong, Sida Peng, and Xiaopeng Ji, who performed in the sample data. We would also like to thank everyone who has helped EasyMocap in any way.

Citation

This project is part of our works iMocap, Mirrored-Human, mvpose, and Neural Body.

Please consider citing these works if you find this repo useful for your projects.

@Misc{easymocap,
  title = {EasyMoCap - Make human motion capture easier.},
  howpublished = {Github},
  year = {2021},
  url = {https://github.com/zju3dv/EasyMocap}
}

@article{dong2021fast,
  title={Fast and Robust Multi-Person 3D Pose Estimation and Tracking from Multiple Views},
  author={Dong, Junting and Fang, Qi and Jiang, Wen and Yang, Yurou and Bao, Hujun and Zhou, Xiaowei},
  journal={T-PAMI},
  year={2021}
}

@inproceedings{dong2020motion,
  title={Motion capture from internet videos},
  author={Dong, Junting and Shuai, Qing and Zhang, Yuanqing and Liu, Xian and Zhou, Xiaowei and Bao, Hujun},
  booktitle={European Conference on Computer Vision},
  pages={210--227},
  year={2020},
  organization={Springer}
}

@inproceedings{peng2021neural,
  title={Neural Body: Implicit Neural Representations with Structured Latent Codes for Novel View Synthesis of Dynamic Humans},
  author={Peng, Sida and Zhang, Yuanqing and Xu, Yinghao and Wang, Qianqian and Shuai, Qing and Bao, Hujun and Zhou, Xiaowei},
  booktitle={CVPR},
  year={2021}
}

@inproceedings{fang2021mirrored,
  title={Reconstructing 3D Human Pose by Watching Humans in the Mirror},
  author={Fang, Qi and Shuai, Qing and Dong, Junting and Bao, Hujun and Zhou, Xiaowei},
  booktitle={CVPR},
  year={2021}
}

- Cao, Z., Hidalgo, G., Simon, T., Wei, S.E., Sheikh, Y.: OpenPose: Real-time multi-person 2D pose estimation using part affinity fields. arXiv preprint arXiv:1812.08008 (2018)

- Kolotouros, N., et al.: Learning to reconstruct 3D human pose and shape via model-fitting in the loop. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (2019)

- Bochkovskiy, A., Wang, C.Y., Liao, H.Y.M.: YOLOv4: Optimal speed and accuracy of object detection. arXiv preprint arXiv:2004.10934 (2020)