reconstruction

Papers with tag reconstruction

2022

- State of the Art in Dense Monocular Non-Rigid 3D Reconstruction. Edith Tretschk, Navami Kairanda, Mallikarjun B R, Rishabh Dabral, Adam Kortylewski, Bernhard Egger, Marc Habermann, Pascal Fua, Christian Theobalt, and Vladislav Golyanik. 2022.

3D reconstruction of deformable (or non-rigid) scenes from a set of monocular 2D image observations is a long-standing and actively researched area of computer vision and graphics. It is an ill-posed inverse problem, since, without additional prior assumptions, it permits infinitely many solutions leading to accurate projection to the input 2D images. Non-rigid reconstruction is a foundational building block for downstream applications like robotics, AR/VR, or visual content creation. The key advantage of using monocular cameras is their omnipresence and availability to the end users as well as their ease of use compared to more sophisticated camera set-ups such as stereo or multi-view systems. This survey focuses on state-of-the-art methods for dense non-rigid 3D reconstruction of various deformable objects and composite scenes from monocular videos or sets of monocular views. It reviews the fundamentals of 3D reconstruction and deformation modeling from 2D image observations. We then start from general methods, which handle arbitrary scenes and make only a few prior assumptions, and proceed towards techniques making stronger assumptions about the observed objects and types of deformations (e.g. human faces, bodies, hands, and animals). A significant part of this STAR is also devoted to classification and a high-level comparison of the methods, as well as an overview of the datasets for training and evaluation of the discussed techniques. We conclude by discussing open challenges in the field and the social aspects associated with the usage of the reviewed methods.

A survey of monocular non-rigid reconstruction that is worth reading.

monocular reconstruction nonrigid review@inproceedings{2210.15664, title = {State of the Art in Dense Monocular Non-Rigid 3D Reconstruction}, author = {Tretschk, Edith and Kairanda, Navami and R, Mallikarjun B and Dabral, Rishabh and Kortylewski, Adam and Egger, Bernhard and Habermann, Marc and Fua, Pascal and Theobalt, Christian and Golyanik, Vladislav}, year = {2022}, tags = {monocular, reconstruction, nonrigid, review}, } -

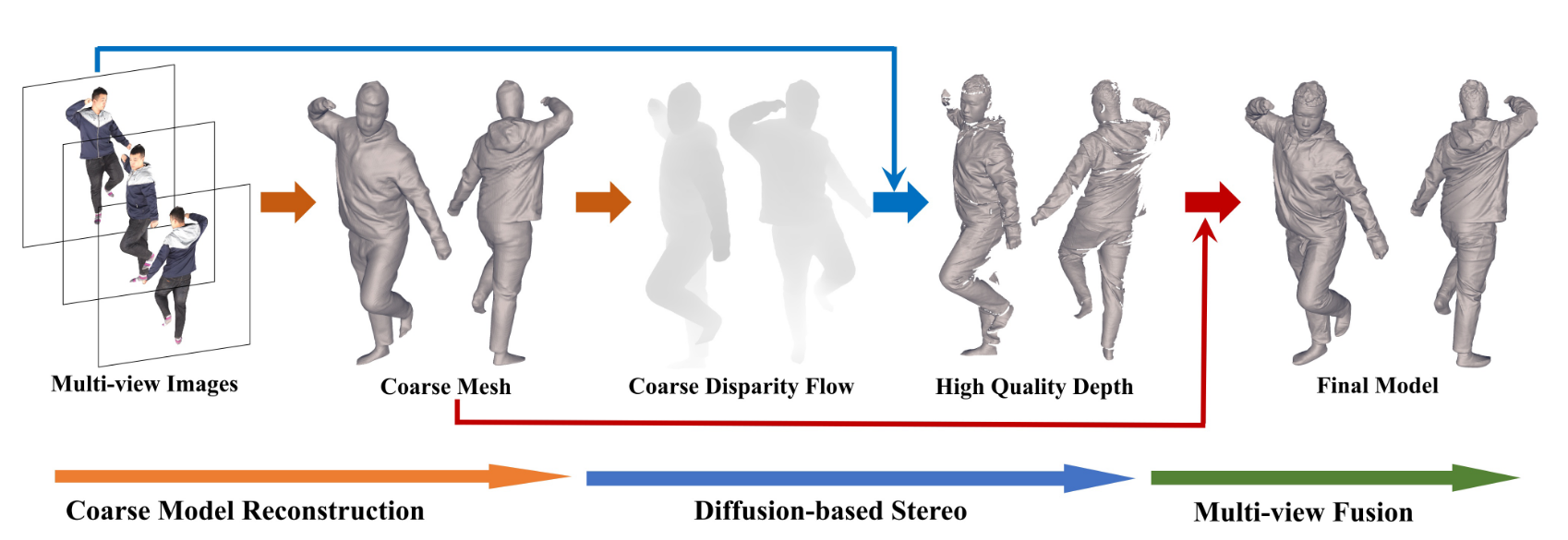

DiffuStereo: High Quality Human Reconstruction via Diffusion-based Stereo Using Sparse CamerasRuizhi Shao, Zerong Zheng, Hongwen Zhang, Jingxiang Sun, and Yebin LiuIn ECCV 2022

We propose DiffuStereo, a novel system using only sparse cameras (8 in this work) for high-quality 3D human reconstruction. At its core is a novel diffusion-based stereo module, which introduces diffusion models, a type of powerful generative model, into the iterative stereo matching network. To this end, we design a new diffusion kernel and additional stereo constraints to facilitate stereo matching and depth estimation in the network. We further present a multi-level stereo network architecture to handle high-resolution (up to 4k) inputs without requiring an unaffordable memory footprint. Given a set of sparse-view color images of a human, the proposed multi-level diffusion-based stereo network can produce highly accurate depth maps, which are then converted into a high-quality 3D human model through an efficient multi-view fusion strategy. Overall, our method enables automatic reconstruction of human models with quality on par with high-end dense-view camera rigs, and this is achieved using a much more lightweight hardware setup. Experiments show that our method outperforms state-of-the-art methods by a large margin both qualitatively and quantitatively.

human-reconstruction

@inproceedings{DiffuStereo,
  title = {DiffuStereo: High Quality Human Reconstruction via Diffusion-based Stereo Using Sparse Cameras},
  author = {Shao, Ruizhi and Zheng, Zerong and Zhang, Hongwen and Sun, Jingxiang and Liu, Yebin},
  year = {2022},
  tags = {human-reconstruction},
  booktitle = {ECCV},
}