dynamic-scene

Papers with tag dynamic-scene

2022


ParticleNeRF: A Particle-Based Encoding for Online Neural Radiance Fields in Dynamic Scenes
Jad Abou-Chakra, Feras Dayoub, and Niko Sünderhauf
In 2022

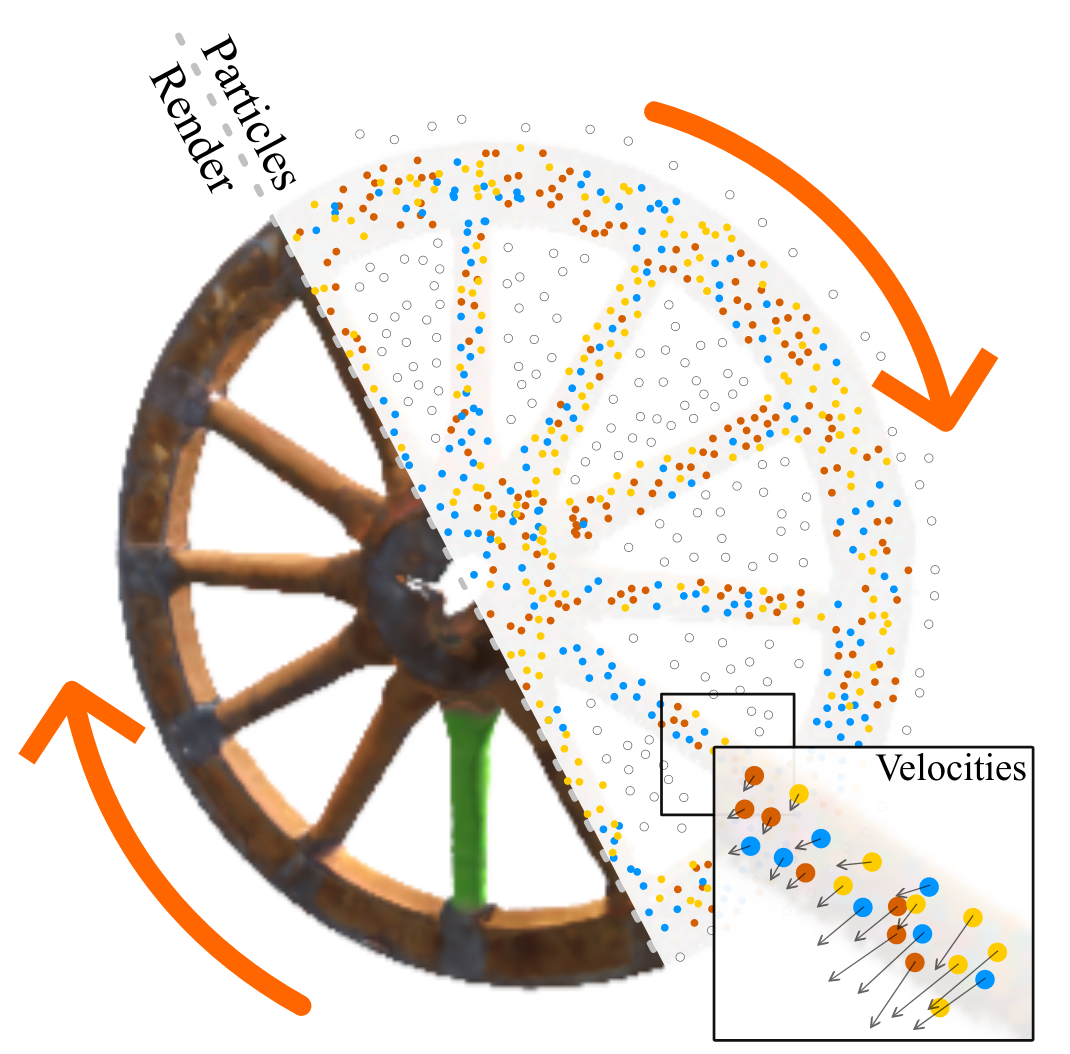

Neural Radiance Fields (NeRFs) learn implicit representations of (typically static) environments from images. Our paper extends NeRFs to handle dynamic scenes in an online fashion. We propose ParticleNeRF, which adapts to changes in the geometry of the environment as they occur, learning a new up-to-date representation every 350 ms. ParticleNeRF can represent the current state of dynamic environments with much higher fidelity than other NeRF frameworks. To achieve this, we introduce a new particle-based parametric encoding, which allows the intermediate NeRF features, now coupled to particles in space, to move with the dynamic geometry. This is possible by backpropagating the photometric reconstruction loss into the position of the particles. The position gradients are interpreted as particle velocities and integrated into positions using a position-based dynamics (PBS) physics system. Introducing PBS into the NeRF formulation allows us to add collision constraints to the particle motion and creates future opportunities to add other movement priors into the system, such as rigid and deformable body

Proposes a particle-based parametric encoding that anchors features to the dynamic geometry, enabling view synthesis of dynamic scenes. A position-based dynamics physics system is used to represent the dynamic geometry.

view-synthesis dynamic-scene

@inproceedings{ParticleNeRF,
  title = {ParticleNeRF: A Particle-Based Encoding for Online Neural Radiance Fields in Dynamic Scenes},
  author = {Abou-Chakra, Jad and Dayoub, Feras and Sünderhauf, Niko},
  year = {2022},
  tags = {view-synthesis, dynamic-scene},
  sida = {Proposes a particle-based parametric encoding that anchors features to the dynamic geometry, enabling view synthesis of dynamic scenes. A position-based dynamics physics system is used to represent the dynamic geometry.},
}
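As a rough illustration of the particle update described in the ParticleNeRF abstract above (position gradients read as velocities, then integrated under collision constraints), the following NumPy sketch may help. It is not the authors' implementation: the function name `particle_step`, the step size `lr`, the minimum separation `min_dist`, the iteration count `iters`, and the equal-split pairwise projection are all assumptions made for illustration, and the gradients are supplied by hand rather than backpropagated from a photometric loss.

```python
import numpy as np


def particle_step(positions, position_grads, lr=0.01, min_dist=0.1, iters=4):
    """One hypothetical particle update: gradient -> velocity -> constraint projection.

    positions, position_grads: arrays of shape (N, 3).
    Returns a new (N, 3) array; the inputs are not modified.
    """
    positions = np.asarray(positions, dtype=float)
    position_grads = np.asarray(position_grads, dtype=float)

    # Assumption: the negative loss gradient, scaled by lr, acts as a velocity.
    velocities = -lr * position_grads
    # Explicit integration with a unit time step gives the predicted positions.
    predicted = positions + velocities

    # Position-based-dynamics style projection of the pairwise constraint
    # |p_i - p_j| >= min_dist: each particle moves half of the violation.
    n = len(predicted)
    for _ in range(iters):
        for i in range(n):
            for j in range(i + 1, n):
                delta = predicted[i] - predicted[j]
                dist = np.linalg.norm(delta)
                # Skip exactly coincident particles (no defined direction).
                if 1e-12 < dist < min_dist:
                    correction = 0.5 * (min_dist - dist) * delta / dist
                    predicted[i] += correction
                    predicted[j] -= correction
    return predicted


# Two particles pulled towards each other by hand-written gradients.
start = np.array([[0.0, 0.0, 0.0], [0.2, 0.0, 0.0]])
grads = np.array([[-8.0, 0.0, 0.0], [8.0, 0.0, 0.0]])
moved = particle_step(start, grads)
```

In this toy setup the gradient step alone would bring the two particles closer than `min_dist`; the projection loop then pushes them apart again, which is the role the abstract attributes to the collision constraints.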

2021


Temporal-MPI: Enabling Multi-Plane Images for Dynamic Scene Modelling via Temporal Basis Learning
Wenpeng Xing and Jie Chen
In 2021

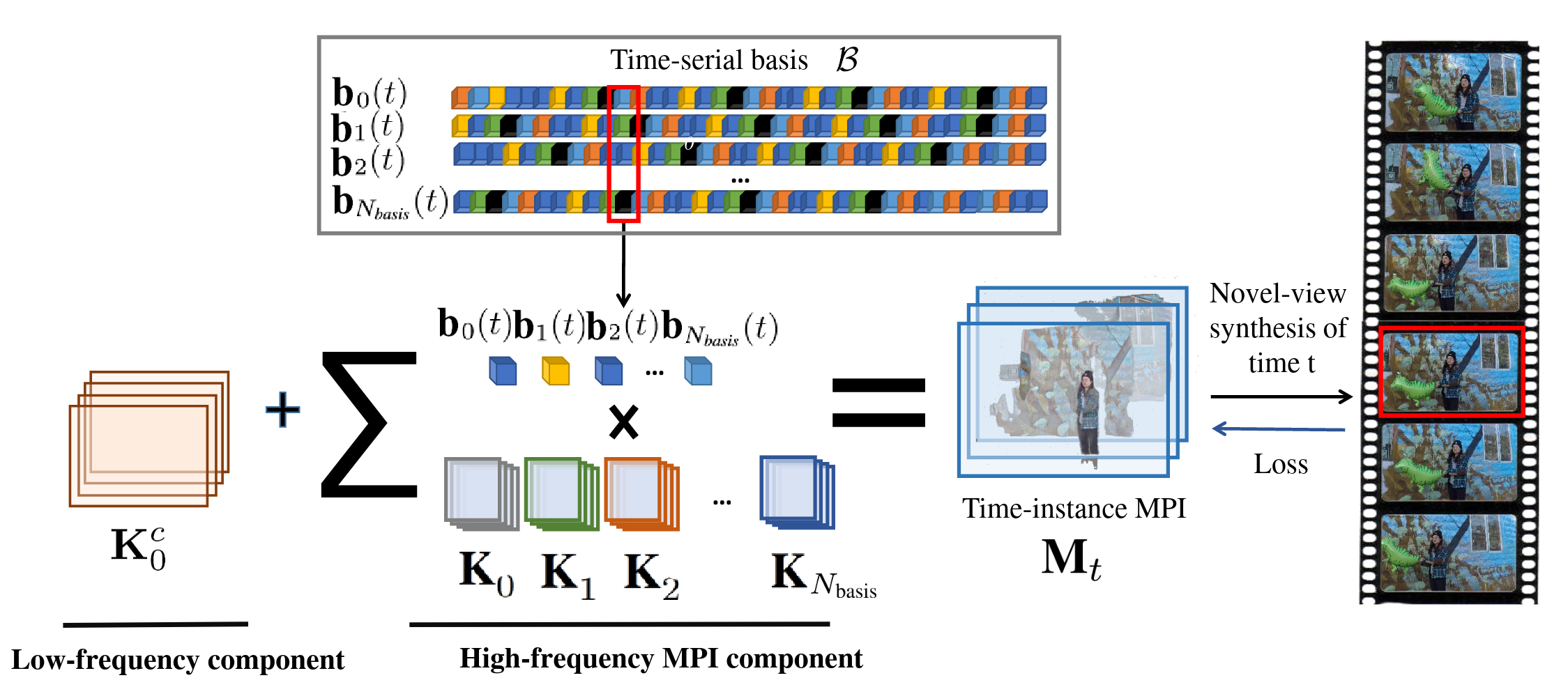

Novel view synthesis of static scenes has achieved remarkable advancements in producing photo-realistic results. However, key challenges remain for immersive rendering of dynamic scenes. One of the seminal image-based rendering methods, the multi-plane image (MPI), produces high novel-view synthesis quality for static scenes, but modelling dynamic content with MPIs has not been studied. In this paper, we propose a novel Temporal-MPI representation that encodes the rich 3D and dynamic variation information throughout the entire video as compact, jointly learned temporal basis and coefficients. A time-instance MPI for rendering can be generated efficiently, within milliseconds, by linear combinations of the temporal basis and coefficients from Temporal-MPI. Novel views at arbitrary time instances can thus be rendered via Temporal-MPI in real time with high visual quality. Our method is trained and evaluated on the Nvidia Dynamic Scene Dataset. We show that our proposed Temporal-MPI is much faster and more compact than other state-of-the-art dynamic scene modelling methods.

Builds a set of MPI bases and coefficients, and obtains the MPI of each frame through linear combinations, enabling dynamic view synthesis.

view-synthesis dynamic-scene

@inproceedings{Temporal-MPI,
  title = {Temporal-MPI: Enabling Multi-Plane Images for Dynamic Scene Modelling via Temporal Basis Learning},
  author = {Xing, Wenpeng and Chen, Jie},
  year = {2021},
  tags = {view-synthesis, dynamic-scene},
  sida = {Builds a set of MPI bases and coefficients, and obtains the MPI of each frame through linear combinations, enabling dynamic view synthesis.},
}
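The linear-combination step mentioned in the Temporal-MPI abstract above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions, not the paper's method: it assumes one scalar coefficient per frame and basis element, an RGBA layout with planes ordered far to near, and rendering at the reference view without any plane warping. The names `time_instance_mpi` and `composite_back_to_front` are hypothetical.

```python
import numpy as np


def time_instance_mpi(basis, coeffs, t):
    """Combine K basis MPIs into the MPI for frame t.

    basis:  (K, D, H, W, 4) array, K basis MPIs with D RGBA planes each.
    coeffs: (T, K) array, assumed to hold one scalar weight per frame and basis.
    Returns a (D, H, W, 4) array.
    """
    # Weighted sum over the basis axis: sum_k coeffs[t, k] * basis[k].
    return np.tensordot(coeffs[t], basis, axes=1)


def composite_back_to_front(mpi):
    """Alpha-composite the planes of one MPI with the standard over operator.

    Assumes plane 0 is the farthest plane and the last channel is alpha.
    """
    rgb = mpi[..., :3]
    alpha = np.clip(mpi[..., 3:], 0.0, 1.0)
    image = np.zeros(mpi.shape[1:3] + (3,))
    for d in range(mpi.shape[0]):
        image = rgb[d] * alpha[d] + image * (1.0 - alpha[d])
    return image


# Toy data: 2 basis MPIs, 2 planes, a single pixel.
basis = np.zeros((2, 2, 1, 1, 4))
basis[0, 0] = [1.0, 1.0, 1.0, 1.0]   # basis 0: opaque white far plane
basis[1, 1] = [0.0, 0.0, 0.0, 1.0]   # basis 1: opaque black near plane
coeffs = np.array([[1.0, 0.0],       # frame 0 uses only basis 0
                   [1.0, 0.5]])      # frame 1 adds half of basis 1
frame0 = composite_back_to_front(time_instance_mpi(basis, coeffs, 0))
frame1 = composite_back_to_front(time_instance_mpi(basis, coeffs, 1))
```

Because each frame's MPI is a small weighted sum over a shared basis, only the basis and a `(T, K)` coefficient table need to be stored, which is one way to read the compactness claim in the abstract.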