nerf

Papers with tag nerf

2022

- nerf2nerf: Pairwise Registration of Neural Radiance Fields. Lily Goli, Daniel Rebain, Sara Sabour, Animesh Garg, and Andrea Tagliasacchi. In 2022.

We introduce a technique for pairwise registration of neural fields that extends classical optimization-based local registration (i.e., ICP) to operate on Neural Radiance Fields (NeRF) – neural 3D scene representations trained from collections of calibrated images. NeRF does not decompose illumination and color, so to make registration invariant to illumination, we introduce the concept of a "surface field" – a field distilled from a pre-trained NeRF model that measures the likelihood of a point being on the surface of an object. We then cast nerf2nerf registration as a robust optimization that iteratively seeks a rigid transformation that aligns the surface fields of the two scenes. We evaluate the effectiveness of our technique by introducing a dataset of pre-trained NeRF scenes – our synthetic scenes enable quantitative evaluations and comparisons to classical registration techniques, while our real scenes demonstrate the validity of our technique in real-world scenarios. Additional results available at: https://nerf2nerf.github.io

Registration of neural radiance fields: extract surface fields from the NeRFs, then solve for the rigid transformation between the two surface fields.
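The abstract describes nerf2nerf as an extension of classical ICP. As a point of reference, here is a minimal sketch of that classical baseline only: robust point-to-point ICP between two 3D point sets, with each step solved by a weighted Kabsch (SVD) fit. It does not use NeRFs or surface fields; the Geman-McClure weighting, the `scale` parameter and the function names are illustrative assumptions, not taken from the paper.

```python
import numpy as np


def weighted_kabsch(src, dst, w):
    """Rigid (R, t) minimising sum_i w_i * ||R @ src_i + t - dst_i||^2."""
    w = w / w.sum()
    mu_s = w @ src                                     # weighted centroids, shape (3,)
    mu_d = w @ dst
    H = (src - mu_s).T @ (w[:, None] * (dst - mu_d))   # 3x3 weighted cross-covariance
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so R is a proper rotation (det = +1).
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = mu_d - R @ mu_s
    return R, t


def robust_icp(src, dst, iters=30, scale=0.1):
    """Classical robust point-to-point ICP (hypothetical helper, not nerf2nerf).

    Returns (R, t) such that src @ R.T + t approximately aligns with dst.
    """
    R, t = np.eye(3), np.zeros(3)
    for _ in range(iters):
        moved = src @ R.T + t
        # Brute-force nearest neighbours; fine for small point sets.
        d2 = ((moved[:, None, :] - dst[None, :, :]) ** 2).sum(axis=-1)
        nn = d2.argmin(axis=1)
        r2 = d2[np.arange(len(src)), nn]
        # Geman-McClure IRLS weights (assumed robust loss): large residuals get little influence.
        w = scale**2 / (scale**2 + r2) ** 2
        R, t = weighted_kabsch(src, dst[nn], w)
    return R, t
```

Because the weights shrink as residuals grow, bad correspondences pull less on the fitted transform; like any local ICP, it still needs a reasonable initial alignment.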

Tags: registration, nerf

@inproceedings{nerf2nerf, title = {nerf2nerf: Pairwise Registration of Neural Radiance Fields}, author = {Goli, Lily and Rebain, Daniel and Sabour, Sara and Garg, Animesh and Tagliasacchi, Andrea}, year = {2022}, tags = {registration, nerf}, sida = {Registration of neural radiance fields: extract surface fields from the NeRFs, then solve for the rigid transformation between the two surface fields.}, }

-

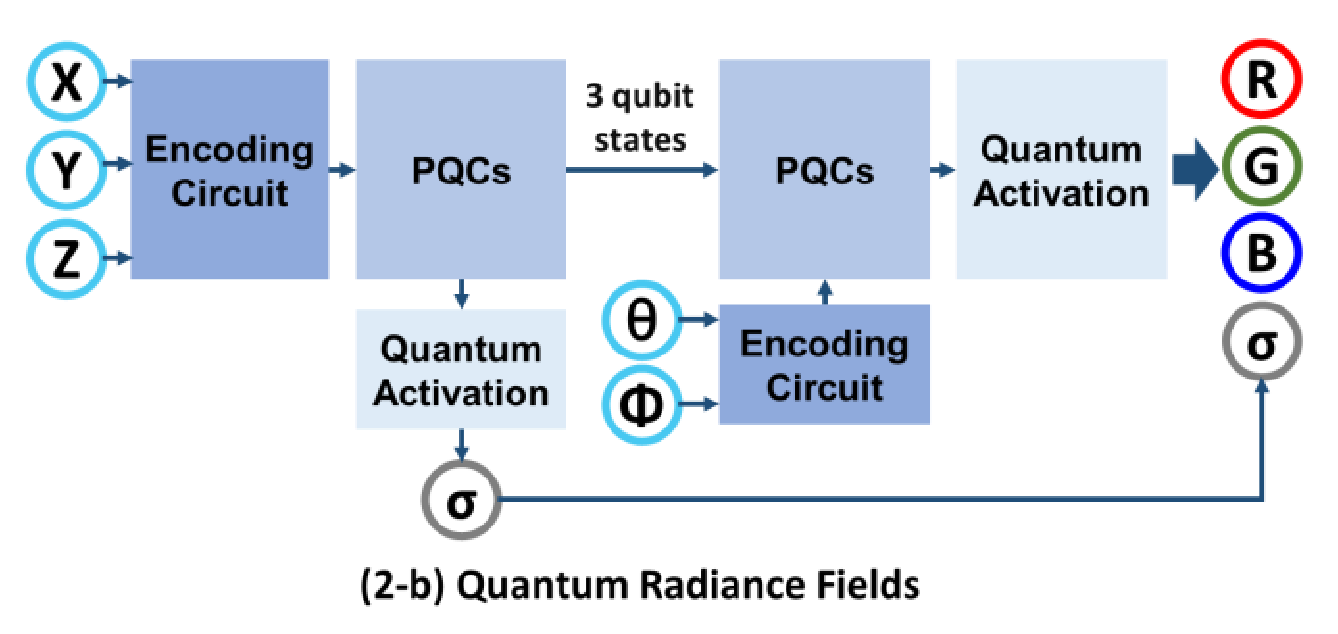

QRF: Implicit Neural Representations with Quantum Radiance Fields. YuanFu Yang and Min Sun. In 2022.

Photorealistic rendering of real-world scenes is a tremendous challenge with a wide range of applications, including MR (Mixed Reality) and VR (Virtual Reality). Neural networks, which have long been investigated in the context of solving differential equations, have previously been introduced as implicit representations for photorealistic rendering. However, realistic rendering using classic computing is challenging because it requires time-consuming optical ray marching and suffers computational bottlenecks due to the curse of dimensionality. In this paper, we propose Quantum Radiance Fields (QRF), which integrate the quantum circuit, quantum activation function, and quantum volume rendering for implicit scene representation. The results indicate that QRF not only takes advantage of the merits of quantum computing technology, such as high speed, fast convergence, and high parallelism, but also ensures high-quality volume rendering.

Accelerates NeRF with quantum techniques.
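For context on the ray-marching cost that QRF targets, a minimal NumPy sketch of the standard classical NeRF volume-rendering quadrature for a single ray. It is not QRF's quantum volume rendering, which this entry does not detail, and `render_ray` is a hypothetical name.

```python
import numpy as np


def render_ray(sigma, rgb, t_vals):
    """Alpha-composite N samples along one ray (classical NeRF quadrature).

    sigma: (N,) densities, rgb: (N, 3) colors, t_vals: (N,) sorted sample depths.
    """
    # Distances between adjacent samples; the last interval is treated as infinite.
    delta = np.append(np.diff(t_vals), 1e10)
    alpha = 1.0 - np.exp(-sigma * delta)                 # opacity of each segment
    # Transmittance T_i = prod_{j<i} (1 - alpha_j): light surviving up to sample i.
    trans = np.cumprod(np.append(1.0, 1.0 - alpha[:-1]))
    weights = trans * alpha                              # contribution of each sample
    color = (weights[:, None] * rgb).sum(axis=0)
    return color, weights
```

The work is linear in the number of samples per ray and is repeated for every pixel, so a frame with N samples per ray and H*W pixels needs N*H*W density and color queries.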

Tags: nerf-speed

@inproceedings{QRF, title = {QRF: Implicit Neural Representations with Quantum Radiance Fields}, author = {Yang, YuanFu and Sun, Min}, year = {2022}, tags = {nerf-speed}, sida = {Accelerates NeRF with quantum techniques.}, }

2021

-

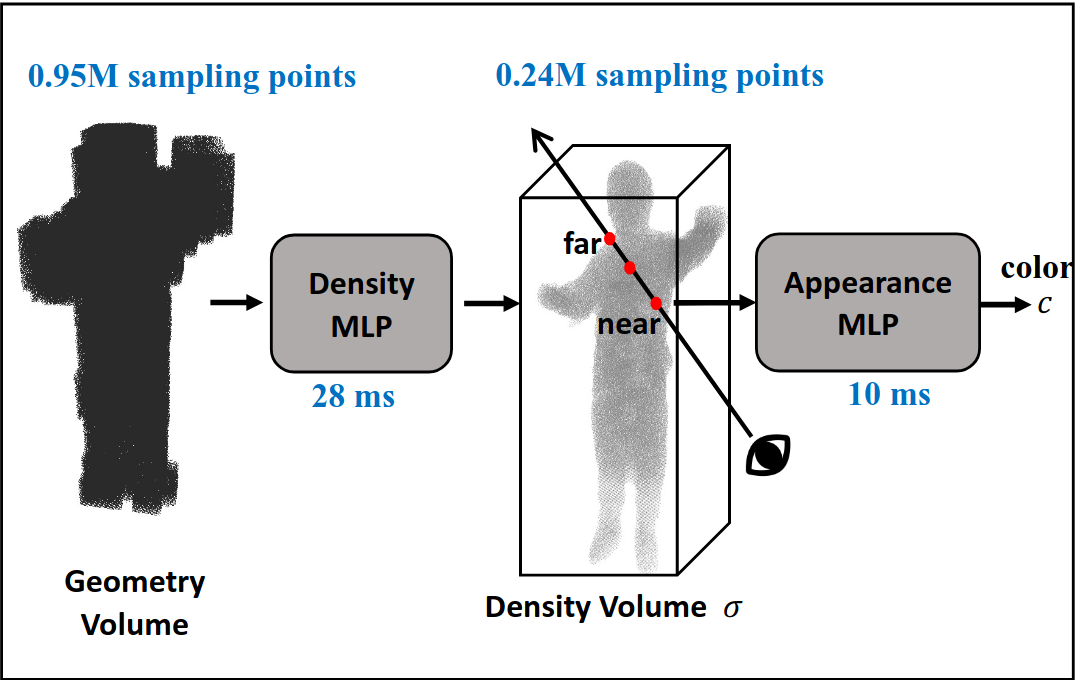

Geometry-Guided Progressive NeRF for Generalizable and Efficient Neural Human Rendering. Mingfei Chen, Jianfeng Zhang, Xiangyu Xu, Lijuan Liu, Yujun Cai, Jiashi Feng, and Shuicheng Yan. In ECCV 2021.

In this work we develop a generalizable and efficient Neural Radiance Field (NeRF) pipeline for high-fidelity free-viewpoint human body synthesis under settings with sparse camera views. Though existing NeRF-based methods can synthesize rather realistic details for the human body, they tend to produce poor results when the input has self-occlusion, especially for unseen humans under sparse views. Moreover, these methods often require a large number of sampling points for rendering, which leads to low efficiency and limits their real-world applicability. To address these challenges, we propose a Geometry-guided Progressive NeRF (GP-NeRF). In particular, to better tackle self-occlusion, we devise a geometry-guided multi-view feature integration approach that utilizes the estimated geometry prior to integrate the incomplete information from input views and construct a complete geometry volume for the target human body. Meanwhile, to achieve higher rendering efficiency, we introduce a progressive rendering pipeline through geometry guidance, which leverages the geometric feature volume and the predicted density values to progressively reduce the number of sampling points and speed up the rendering process. Experiments on the ZJU-MoCap and THUman datasets show that our method significantly outperforms the state of the art across multiple generalization settings, while the time cost is reduced by more than 70% via our efficient progressive rendering pipeline.

Geometry-guided integration of image features yields a density volume, which is used to reduce the number of sampled points.
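A minimal sketch of the general idea of density-guided sample reduction: query a cheap density estimate at every candidate point, then run the expensive color model only where the density is non-negligible. `density_fn`, `color_fn` and the threshold `sigma_min` are hypothetical placeholders; GP-NeRF's actual progressive pipeline, built on its geometric feature volume, is not reproduced here.

```python
import numpy as np


def progressive_render_samples(points, density_fn, color_fn, sigma_min=0.01):
    """Two-stage sampling sketch (hypothetical names, not GP-NeRF's code).

    Stage 1: query a cheap density estimate at every candidate point.
    Stage 2: run the expensive color model only where density is non-negligible.
    """
    sigma = density_fn(points)                 # (N,) cheap density query
    keep = sigma > sigma_min                   # samples in empty space are dropped
    rgb = np.zeros((len(points), 3))
    if keep.any():
        rgb[keep] = color_fn(points[keep])     # (K, 3) with K <= N
    return sigma, rgb, keep
```

The saving is proportional to the fraction of samples that land in empty space and therefore skip `color_fn`.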

Tags: human-nerf, accelerate

@inproceedings{GGNerf, title = {Geometry-Guided Progressive NeRF for Generalizable and Efficient Neural Human Rendering}, author = {Chen, Mingfei and Zhang, Jianfeng and Xu, Xiangyu and Liu, Lijuan and Cai, Yujun and Feng, Jiashi and Yan, Shuicheng}, year = {2021}, tags = {human-nerf, accelerate}, booktitle = {ECCV}, }